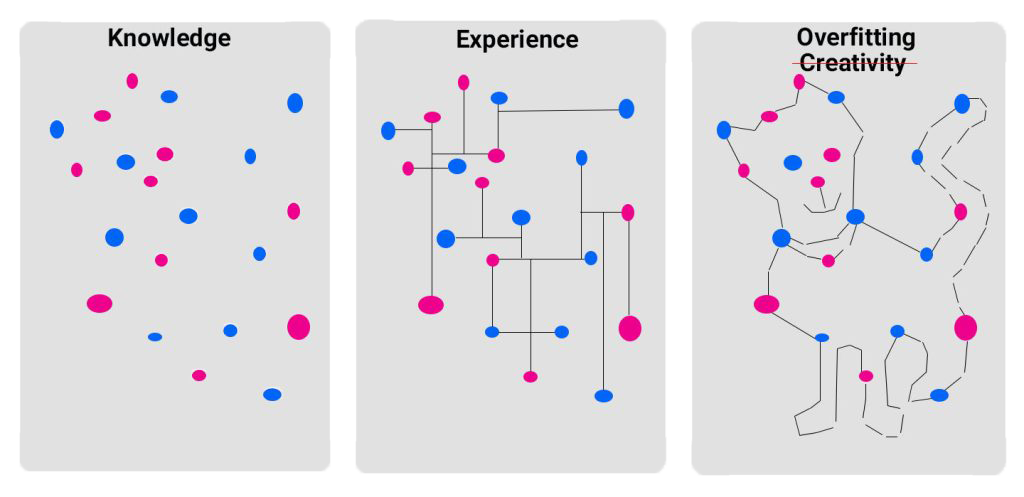

Machine Learning and Deep Learning models use regularization techniques to prevent overfitting. Before delving into the regularization methods, it’s crucial to understand the concept of overfitting.

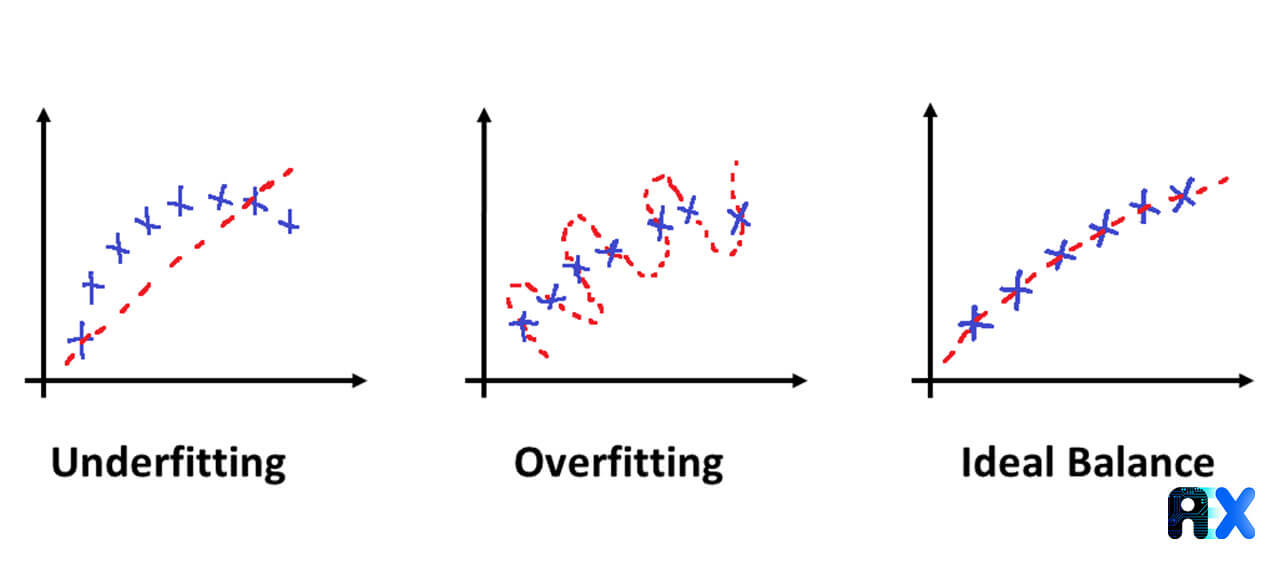

To illustrate, imagine you are preparing for a final exam and your professor has provided you with 100 sample questions. If your preparation is limited to memorizing these 100 questions and you cannot answer questions that differ even slightly from them, your understanding of the material would be considered overfitted. In the world of algorithms, overfitting occurs when a model accurately predicts the data in its training set but fails to predict or classify new data that deviates from it. Graphically, an overfitted model appears as a curve that passes through nearly every training point, noise included, and therefore generalizes poorly to real-world data.
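The exam analogy can be made concrete with a small experiment. The sketch below is illustrative only: the sine-shaped target, the noise level, the sample sizes, and the polynomial degrees are all assumptions chosen for the demonstration, not values from the article. It fits a low-degree and a high-degree polynomial to the same 12 noisy points and compares the error on the training points with the error on unseen points.

```python
import numpy as np

rng = np.random.default_rng(0)

def true_fn(x):
    # Hypothetical "real" relationship the model is supposed to learn.
    return np.sin(2 * np.pi * x)

# Small noisy training set (the "100 sample questions").
x_train = np.linspace(0.0, 1.0, 12)
y_train = true_fn(x_train) + rng.normal(scale=0.2, size=x_train.shape)

# Unseen data drawn from the same relationship (the "final exam").
x_test = np.linspace(0.02, 0.98, 200)
y_test = true_fn(x_test) + rng.normal(scale=0.2, size=x_test.shape)

def mse(coeffs, x, y):
    """Mean squared error of a polynomial with the given coefficients."""
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

results = {}
for degree in (3, 11):
    # Least-squares polynomial fit; degree 11 has as many parameters
    # as there are training points, so it can memorize them.
    coeffs = np.polyfit(x_train, y_train, degree)
    results[degree] = (mse(coeffs, x_train, y_train),
                       mse(coeffs, x_test, y_test))

for degree, (train_err, test_err) in results.items():
    print(f"degree {degree:2d}: train MSE = {train_err:.4f}, "
          f"test MSE = {test_err:.4f}")
```

The degree-11 polynomial should reach a training error close to zero because it has enough freedom to pass through every training point, yet it typically does worse than the degree-3 fit on the unseen points. That gap between training and test error is the practical signature of overfitting.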

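To reduce overfitting, regularization techniques such as L1, L2, and Dropout constrain the model. L1 and L2 add a penalty on the size of the weights to the loss function, while Dropout injects "noise" by randomly disabling units during training. These methods are commonly used in Deep Learning models such as Artificial Neural Networks (ANNs).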

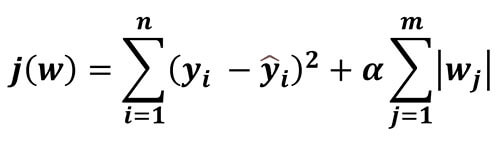

While L1 and L2 differ mathematically, both serve the same purpose: reducing overfitting.

L1 regularization adds an L1 penalty proportional to the sum of the absolute values of the coefficients, which restricts their size. Lasso regression is one method that uses this penalty. With L1, some weights are driven exactly to zero, which produces a sparse model. In L1 regularization, the regression coefficients are determined by minimizing the L1 loss function as follows:

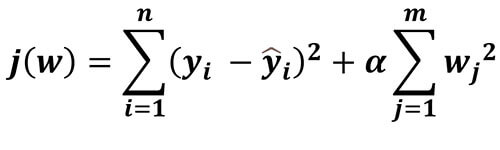
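Since the equation above is only available as an image, the standard textbook form of the Lasso objective is given here for reference. It is a sketch under the usual assumptions rather than a transcription of the image. The notation is chosen here and is not taken from the article: $n$ training samples, $p$ features, targets $y_i$, inputs $x_{ij}$, coefficients $\beta_j$, and a regularization strength $\lambda \ge 0$.

$$
\hat{\beta}^{\text{L1}} = \arg\min_{\beta} \; \sum_{i=1}^{n} \Big( y_i - \sum_{j=1}^{p} x_{ij}\,\beta_j \Big)^{2} + \lambda \sum_{j=1}^{p} \lvert \beta_j \rvert
$$

The first term is the ordinary squared error. The second term is the L1 penalty: a larger $\lambda$ penalizes large coefficients more strongly, and setting $\lambda = 0$ recovers unregularized least squares.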

L2 regularization, on the other hand, adds an L2 penalty proportional to the sum of the squared coefficients. This penalty is used in Ridge regression and in the standard formulation of Support Vector Machines (SVMs). Unlike L1 regularization, L2 shrinks the weights toward zero but generally does not make them exactly zero. L2 regularization consists of minimizing the L2 loss function, which can be expressed as follows:

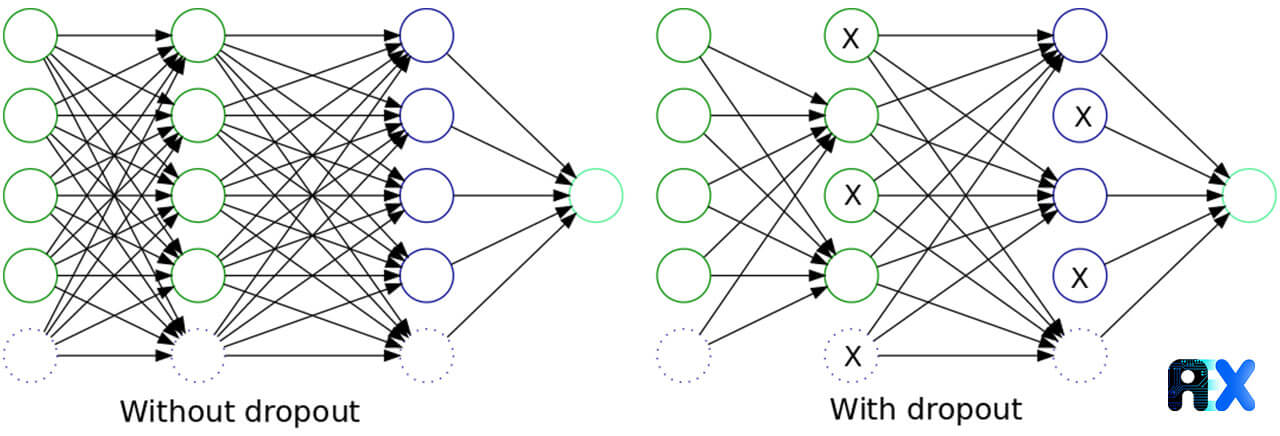
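Since the equation above is only available as an image, the standard textbook form of the Ridge objective is given here for reference. It is a sketch under the usual assumptions rather than a transcription of the image. The notation is chosen here and is not taken from the article: $n$ training samples, $p$ features, targets $y_i$, inputs $x_{ij}$, coefficients $\beta_j$, and a regularization strength $\lambda \ge 0$.

$$
\hat{\beta}^{\text{L2}} = \arg\min_{\beta} \; \sum_{i=1}^{n} \Big( y_i - \sum_{j=1}^{p} x_{ij}\,\beta_j \Big)^{2} + \lambda \sum_{j=1}^{p} \beta_j^{2}
$$

Because the penalty grows with the square of each coefficient, large coefficients are punished heavily while small ones are barely penalized. This is why L2 shrinks weights toward zero without usually setting them exactly to zero.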

The Dropout regularization method works by randomly setting input units to 0 at each step during training. By temporarily removing nodes from a layer, the Dropout layer keeps units from relying too heavily on one another, which reduces overfitting, as illustrated in the photo below.
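The mechanism can be sketched in a few lines of NumPy. This is a minimal illustration, not the implementation of any particular framework: the function name `dropout` and its parameters are hypothetical. The rescaling of surviving units by `1 / (1 - rate)` (often called "inverted dropout") is a common convention assumed here, not something stated in the article; it keeps the expected activation the same during training and inference.

```python
import numpy as np

def dropout(x, rate, rng, training=True):
    """Zero each unit with probability `rate` during training.

    Surviving units are scaled by 1 / (1 - rate) so the expected value
    of the output matches the input (inverted dropout, an assumption
    of this sketch). At inference time the input is returned unchanged.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return x
    keep_prob = 1.0 - rate
    # Boolean mask: True where the unit is kept, False where it is dropped.
    mask = rng.random(x.shape) < keep_prob
    return x * mask / keep_prob

rng = np.random.default_rng(0)
activations = np.ones((4, 5))

train_out = dropout(activations, rate=0.5, rng=rng, training=True)
infer_out = dropout(activations, rate=0.5, rng=rng, training=False)

print(train_out)  # each entry is either 0.0 (dropped) or 2.0 (kept and rescaled)
print(infer_out)  # identical to the input
```

A fresh random mask is drawn on every call, so a different subset of units is removed at each training step, and no unit can rely on any particular neighbor being present.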

© 2022 Aiex.ai All Rights Reserved.