
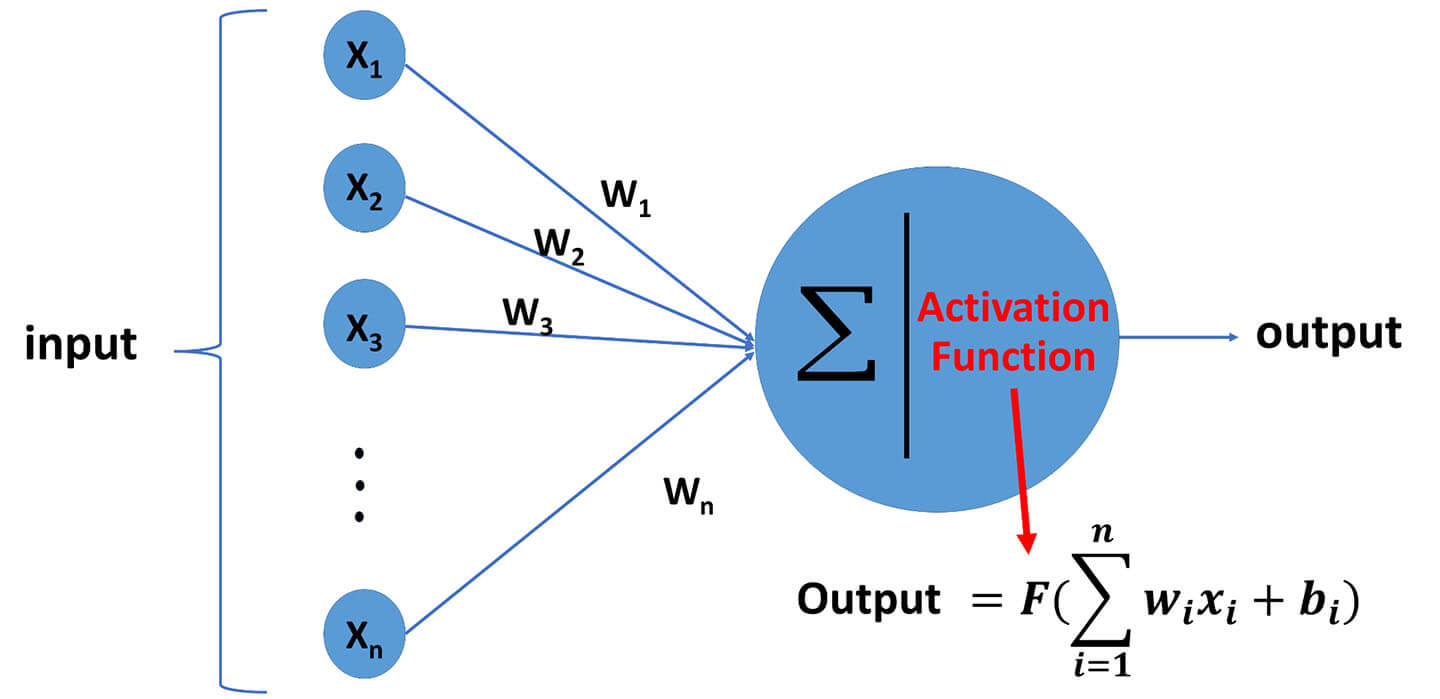

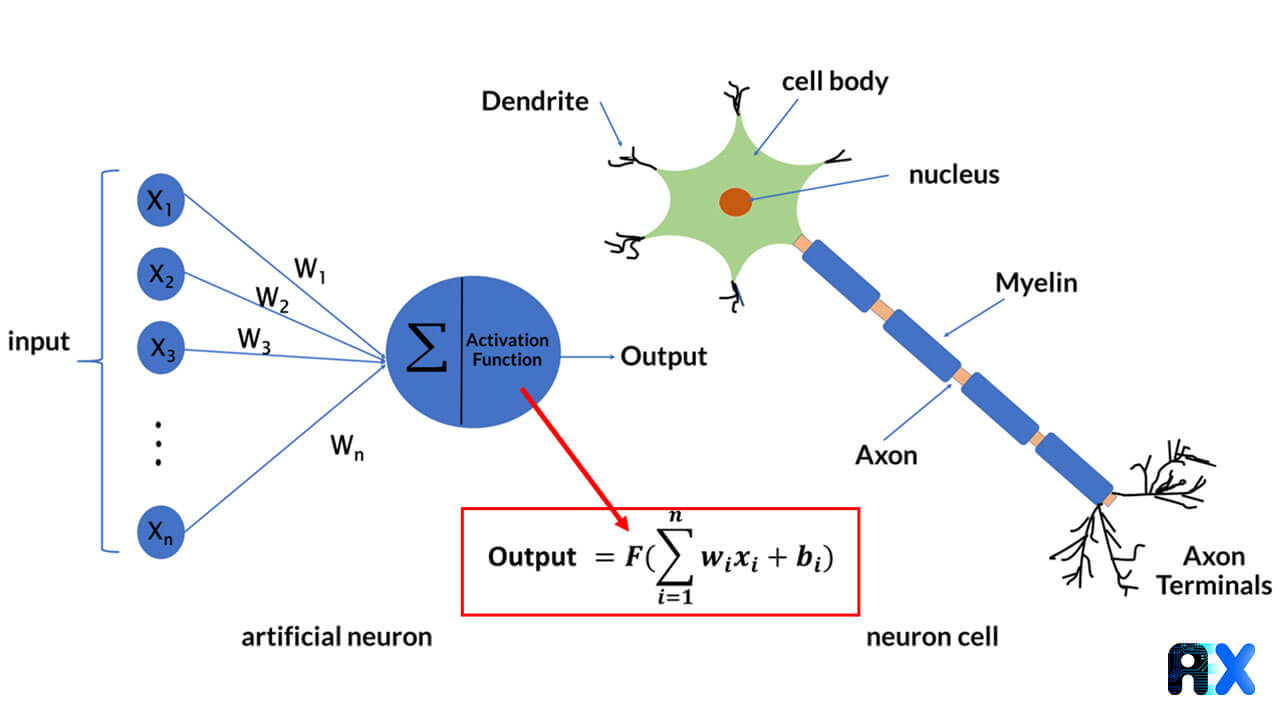

Activation functions are a critical component of neural network nodes. They determine whether, and how strongly, a node is active by applying a simple mathematical function inside the artificial neuron. In general, a node first computes the weighted sum of its inputs (each input multiplied by its weight, usually plus a bias term), and the activation function then maps that sum to the node's output value. The accompanying diagram illustrates the general representation of both an artificial and a biological neuron, including the activation function.
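To make the weighted-sum-then-activate idea concrete, here is a minimal sketch of a single artificial neuron in plain Python. The function name `neuron_output`, the sample weights, and the bias value are illustrative assumptions, not part of any particular library.

```python
def neuron_output(inputs, weights, bias, activation):
    """Compute one neuron's output: activation(sum(w_i * x_i) + bias)."""
    # Weighted sum of the inputs plus the bias (the pre-activation value).
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # The activation function turns the pre-activation into the output.
    return activation(z)


# Hypothetical example values, chosen only for illustration.
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -1.0, 1.0]
bias = 0.5

# With a pass-through activation we simply see the weighted sum:
# 0.5*1.0 + (-1.0)*2.0 + 1.0*3.0 + 0.5 = 2.0
pre_activation = neuron_output(inputs, weights, bias, lambda z: z)
print(pre_activation)
```

Swapping the `activation` argument for a different function changes how the same weighted sum is turned into an output, which is the role the activation function plays in every node.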

Activation functions in neural networks can be broadly categorized into three groups: binary step functions, linear functions, and nonlinear functions.

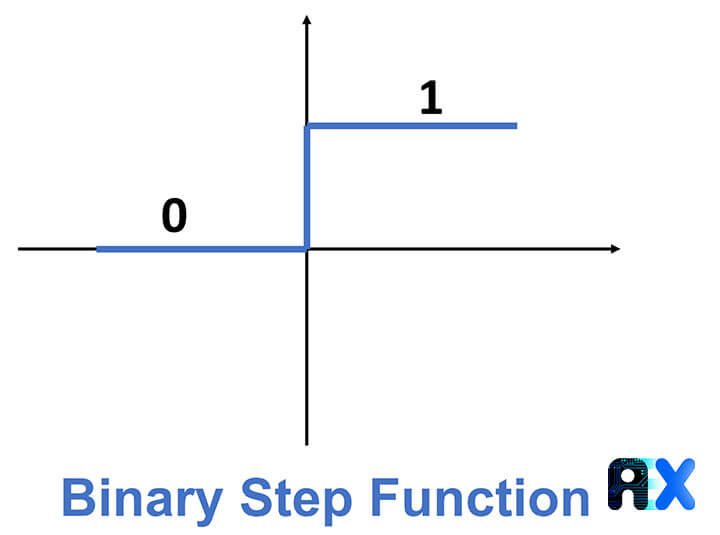

The binary step function uses a threshold to decide whether a node is active or inactive. It compares the node's input to the threshold value: if the input exceeds the threshold, the node is activated and produces a fixed output (typically 1); otherwise, it remains inactive and its output is zero.
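The threshold comparison described above can be sketched in a few lines of Python. The default threshold of 0 and the active output of 1 are common conventions assumed here for illustration.

```python
def binary_step(x, threshold=0.0):
    """Return 1 if the input exceeds the threshold, otherwise 0."""
    # Assumption: an active node outputs 1; an inactive node outputs 0.
    return 1 if x > threshold else 0


print(binary_step(0.7))                 # input above the threshold: active
print(binary_step(-0.2))                # input below the threshold: inactive
print(binary_step(0.7, threshold=1.0))  # same input, higher threshold: inactive
```

Because the output jumps between two values, the function carries no information about how far the input is from the threshold.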

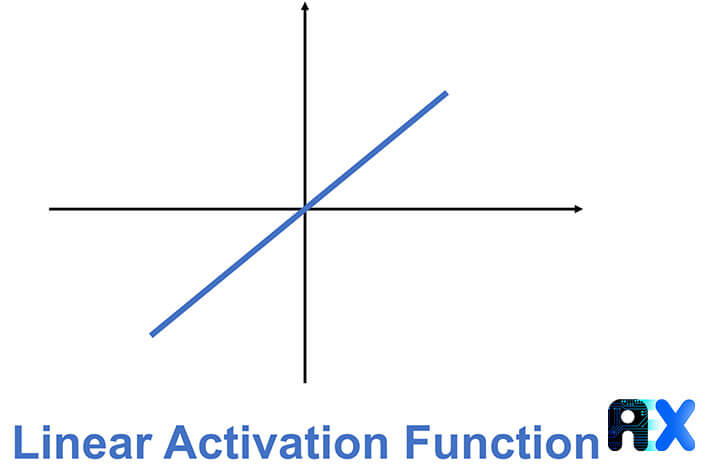

A linear activation function, also known as an “identity function,” applies no transformation and simply passes its input value to the next layer unaltered. Its output is proportional to its input and is therefore unbounded: it cannot be restricted to a specific interval. For this reason, linear activations are typically used where an unrestricted numeric output is needed, as in linear regression models.
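As a small illustration, the identity activation can be written as a function that returns its argument unchanged. The sample values below are arbitrary and only show that the output range is as wide as the input range.

```python
def linear(x):
    """Identity activation: pass the input through unchanged."""
    return x


# Arbitrary sample inputs, including very large magnitudes.
values = [-1000.0, -1.5, 0.0, 2.5, 1e6]
outputs = [linear(v) for v in values]
print(outputs)  # identical to `values`: nothing is squashed or clipped
```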

Nonlinear activation functions are the most commonly used type in neural networks. They allow the model to adapt to many kinds of data and to generalize beyond simple linear relationships. Applying a nonlinear activation adds an extra step to the calculation of each layer, but it is a crucial one. Without activation functions, each node performs only a linear computation on its inputs using its weights and bias. Because the composition of two linear functions is itself a linear function, a stack of such layers is equivalent to a single linear layer, and the number of hidden layers becomes irrelevant. Nonlinear activation functions are therefore essential for a neural network to learn nonlinear relationships.
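The collapse of stacked linear layers can be checked numerically. The sketch below uses small hand-picked 2x2 weight matrices (hypothetical values) and plain-Python helpers to show that two linear layers equal one combined linear layer, while inserting a nonlinearity between them (ReLU is used here purely as an example) breaks that equivalence.

```python
def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matmul(A, B):
    """Multiply matrix A by matrix B."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def add(u, v):
    """Element-wise sum of two vectors."""
    return [a + b for a, b in zip(u, v)]


# Hypothetical weights and biases for two layers.
W1, b1 = [[1.0, 2.0], [0.0, -1.0]], [0.5, 1.0]
W2, b2 = [[2.0, 0.0], [1.0, 1.0]], [-1.0, 0.0]


def two_linear_layers(x):
    h = add(matvec(W1, x), b1)        # layer 1, no activation
    return add(matvec(W2, h), b2)     # layer 2, no activation


# The same mapping expressed as ONE layer: W = W2 @ W1, b = W2 @ b1 + b2.
W = matmul(W2, W1)
b = add(matvec(W2, b1), b2)


def single_layer(x):
    return add(matvec(W, x), b)


def two_layers_with_relu(x):
    # ReLU applied between the layers (an example nonlinearity).
    h = [max(0.0, a) for a in add(matvec(W1, x), b1)]
    return add(matvec(W2, h), b2)


x = [3.0, 4.0]
print(two_linear_layers(x) == single_layer(x))     # True: the layers collapse
print(two_layers_with_relu(x) == single_layer(x))  # False: no longer one linear map
```

The single combined layer reproduces the two-layer output exactly, so the extra depth adds nothing until a nonlinear activation is placed between the layers.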

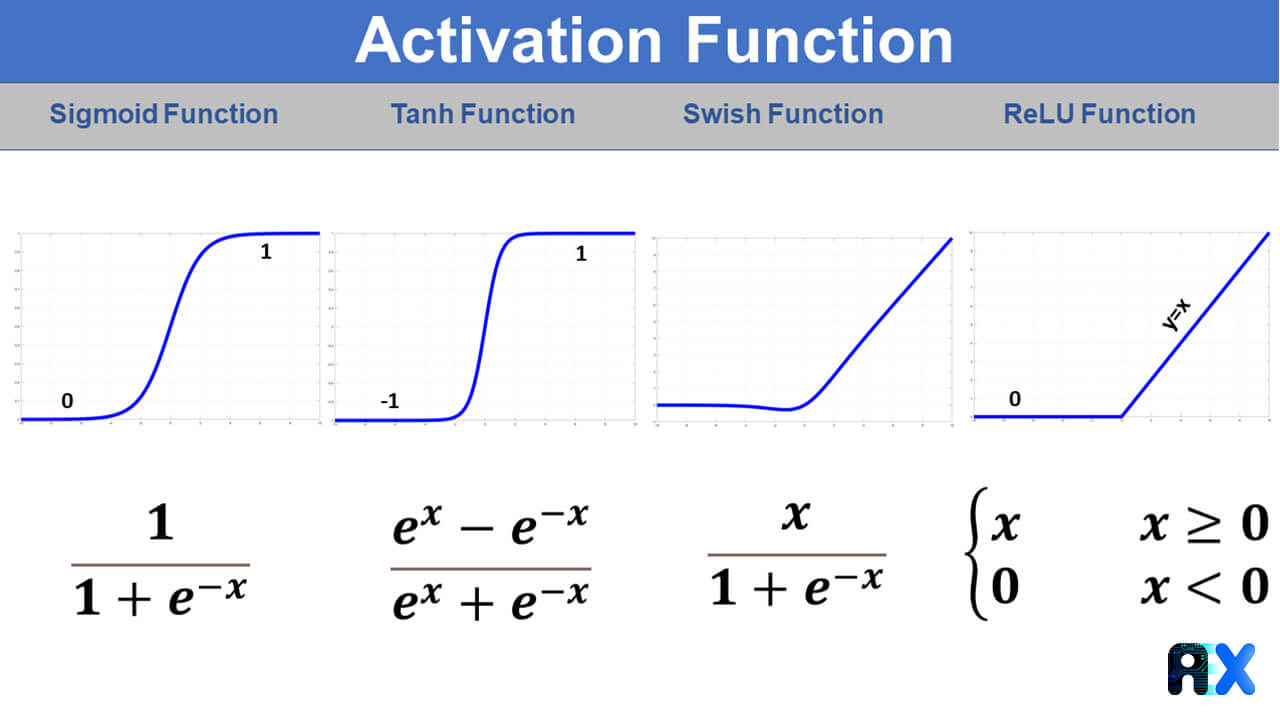

Below are some examples of nonlinear activation functions commonly used in neural networks:

The figure below shows an overview of some important nonlinear activation functions.
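As an illustration, three widely used nonlinear activation functions (sigmoid, tanh, and ReLU, chosen here as representative examples) can be sketched with Python's standard `math` module. The sigmoid is written in a numerically stable form so that large negative inputs do not overflow.

```python
import math


def sigmoid(x):
    """Logistic sigmoid: squashes any real input into the interval (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use exp(x) to avoid overflow in exp(-x).
    e = math.exp(x)
    return e / (1.0 + e)


def tanh(x):
    """Hyperbolic tangent: squashes any real input into (-1, 1)."""
    return math.tanh(x)


def relu(x):
    """Rectified linear unit: zero for negative inputs, identity otherwise."""
    return max(0.0, x)


for x in (-2.0, 0.0, 2.0):
    print(x, sigmoid(x), tanh(x), relu(x))
```

Sigmoid and tanh are bounded and smooth, while ReLU is unbounded above and cheap to compute, which is one reason each suits different layers and tasks.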


© 2022 Aiex.ai All Rights Reserved.