
AIEX Deep Learning platform provides all the tools necessary for a complete Deep Learning workflow, from data management to model training and, finally, deployment of the trained models. You can use the trained models to transform your visual inspections, saving time and money while increasing accuracy and speed.


Docker’s unique features and capabilities made it popular soon after its public release in 2013. In this article, we provide a brief introduction to Docker and discuss its applications in machine learning.

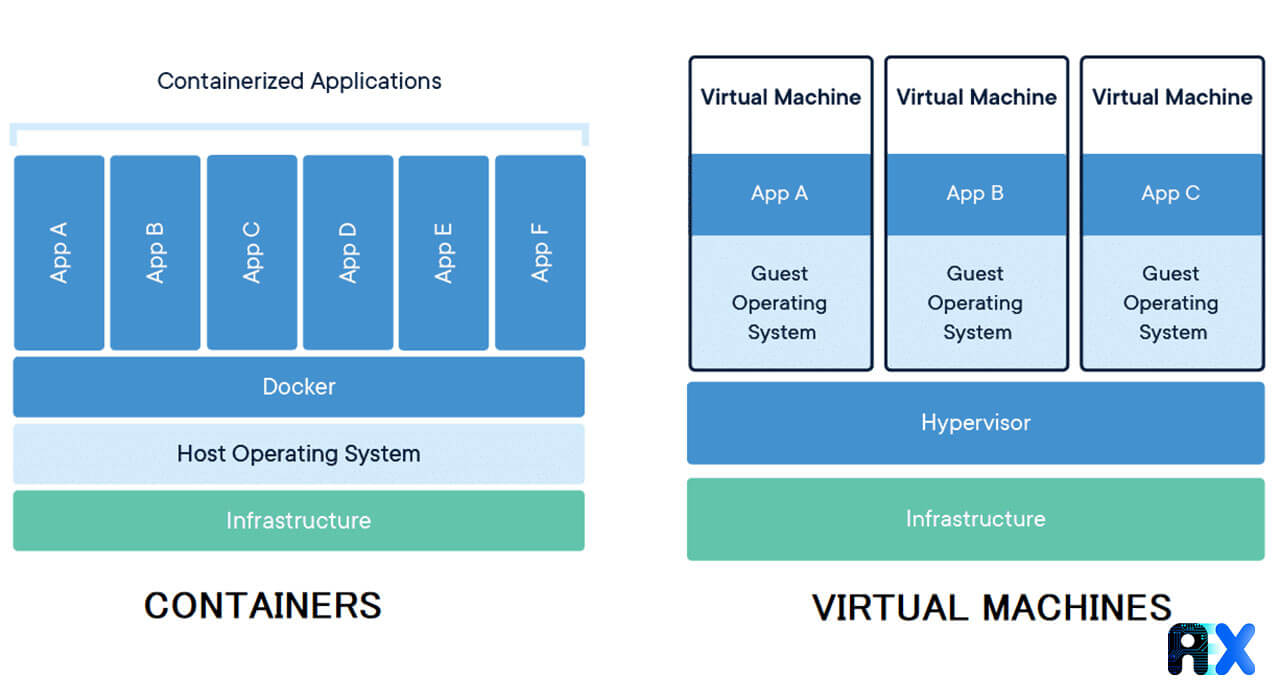

Docker is a widely used platform that simplifies building, deploying, and managing containerized applications, and it has become a de facto standard among software developers and system administrators. Its key innovation is the ability to run applications in isolation, without affecting other parts of the system. As an open-source project, Docker automates the deployment of applications inside containers by adding a layer of abstraction on top of operating-system-level virtualization. With Docker, developers can package an application together with all of its dependencies into a single standardized unit. Compared with virtual machines (VMs), containers have lower overhead, so they use infrastructure resources more efficiently.

Docker packages applications into containers. Containers are central to the Docker ecosystem because they standardize software into units that include all necessary dependencies, so an application runs quickly and reliably across a wide range of computing environments. Containers provide isolated environments similar to those of virtual machines (VMs), but unlike VMs they do not run a complete guest operating system. Instead, they share the host’s kernel and are isolated from one another at the operating-system level.

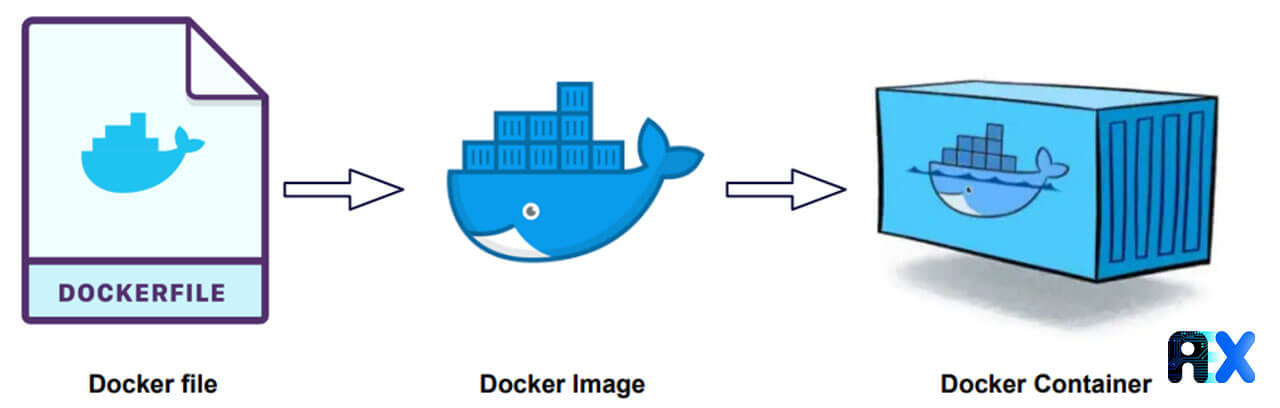

A Docker container image is a lightweight and self-sufficient package of software that includes all the necessary components to run an application: code, runtime, system tools, libraries, and settings. These images are transformed into live containers when run on the Docker Engine. In other words, an image is a blueprint for creating a container at runtime.
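To make the list of image components concrete, here is a minimal sketch in Python that renders a Dockerfile, the text recipe that `docker build` turns into an image. It assumes a hypothetical Python project with a `requirements.txt` file and a `train.py` entry point, and uses the official `python:3.10-slim` base image as an example; each instruction is commented with the image component it supplies.

```python
def render_dockerfile(
    base_image: str = "python:3.10-slim",
    requirements_file: str = "requirements.txt",
    entrypoint: str = "train.py",
) -> str:
    """Return a minimal Dockerfile for a hypothetical Python ML project."""
    lines = [
        # Runtime and system tools: the base image ships an OS userland and Python.
        f"FROM {base_image}",
        "WORKDIR /app",
        # Settings: environment variables baked into the image.
        "ENV PYTHONDONTWRITEBYTECODE=1 PYTHONUNBUFFERED=1",
        # Libraries: install pinned dependencies before copying the code,
        # so this layer stays cached when only the code changes.
        f"COPY {requirements_file} .",
        f"RUN pip install --no-cache-dir -r {requirements_file}",
        # Code: copy the project source last, since it changes most often.
        "COPY . .",
        # Default command executed when a container starts from this image.
        f'CMD ["python", "{entrypoint}"]',
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_dockerfile())
```

Saving the output as `Dockerfile` and running `docker build -t my-model .` followed by `docker run --rm my-model` would build the image and then start a container from it; `my-model` is a placeholder tag.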

Suppose you are a machine learning engineer who has trained a model in a Jupyter notebook that depends on many libraries, each at a specific version. Docker makes it easy to replicate your working environment so that colleagues and others can run the code and reproduce your results. Using the same library versions, random seeds, and even the same operating system helps keep the results consistent. Furthermore, if you intend to use the same model in a product, Docker makes it easier to reproduce those results in production.
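A minimal sketch of the environment-capturing side of this, using only the Python standard library: it seeds Python’s built-in random number generator and produces pinned `name==version` lines that could go into the `requirements.txt` installed by a Docker image. The helper names are hypothetical. Libraries such as NumPy, PyTorch, and TensorFlow keep their own random states, so a real project would seed those as well, and some GPU operations can stay nondeterministic even with fixed seeds.

```python
import platform
import random
import sys
from importlib import metadata


def set_seed(seed: int) -> None:
    """Seed Python's built-in RNG (seed NumPy/PyTorch here too if you use them)."""
    random.seed(seed)


def pinned_requirements(packages: list[str]) -> list[str]:
    """Return 'name==version' lines for installed packages, skipping missing ones."""
    lines = []
    for name in packages:
        try:
            lines.append(f"{name}=={metadata.version(name)}")
        except metadata.PackageNotFoundError:
            print(f"warning: {name} is not installed; skipping", file=sys.stderr)
    return lines


def environment_summary() -> dict[str, str]:
    """Record the interpreter and OS details a colleague would need to match."""
    return {
        "python": platform.python_version(),
        "os": platform.system(),
        "machine": platform.machine(),
    }


if __name__ == "__main__":
    set_seed(42)
    first = [random.random() for _ in range(3)]
    set_seed(42)
    second = [random.random() for _ in range(3)]
    assert first == second  # the same seed yields the same sequence
    print(environment_summary())
    print("\n".join(pinned_requirements(["numpy", "scikit-learn"])))
```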

Another benefit of Docker for machine learning engineers is the ability to move code and dependencies easily between systems with different hardware resources. Without Docker, preparing a new system to run the code can be tedious and time-consuming. By bundling code and dependencies into containers, machine learning engineers can run the same project on a wide range of computers, largely independent of the underlying hardware and host operating system. Once a project has been “Dockerized,” work on it can begin almost immediately on a new machine.

Deploying machine learning models is typically a complex process, and Docker simplifies it: the model can be wrapped in an API, packaged in a container, and then deployed and scaled with container orchestration tools such as Kubernetes.
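The following is a minimal sketch of wrapping a model in an API using only Python’s standard library. The `predict` function is a hypothetical stand-in for a trained model (a fixed linear function), and the `/predict` endpoint and JSON format are assumptions. Production services usually rely on a web framework and a production-grade server rather than `http.server`.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features: list[float]) -> float:
    """Hypothetical stand-in for a trained model: a fixed linear function."""
    weights = [0.5, -0.25, 1.0]
    return sum(w * x for w, x in zip(weights, features))


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            result = predict([float(x) for x in payload["features"]])
        except (ValueError, KeyError, TypeError):
            # Malformed JSON, a missing key, or non-numeric features.
            self.send_error(400, 'expected JSON like {"features": [1, 2, 3]}')
            return
        body = json.dumps({"prediction": result}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(host: str = "0.0.0.0", port: int = 8000) -> HTTPServer:
    """Create the server; 0.0.0.0 makes it reachable from outside a container."""
    return HTTPServer((host, port), PredictHandler)
```

Inside a container, the service would be started with `serve().serve_forever()`. Binding to `0.0.0.0` matters because the container’s port is published to the host (for example with `docker run -p 8000:8000 my-model`, where `my-model` is a placeholder tag), and a server bound only to `127.0.0.1` inside the container would not be reachable from outside.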

Furthermore, many machine learning engineers use Docker to prototype deep learning models on a single GPU and then scale up to more powerful resources, such as those available on Amazon Web Services (AWS). NVIDIA Docker (nvidia-docker, since superseded by the NVIDIA Container Toolkit) makes it quick to create containers that can access the host’s GPUs, which deep learning libraries such as TensorFlow and PyTorch need in order to run on a GPU.
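As a hedged sketch, the helper below only builds the `docker run` argument list instead of executing it, so the same launcher can target a local single-GPU workstation or a cloud GPU instance. It assumes Docker 19.03 or later (the release that added the `--gpus` flag) and the NVIDIA Container Toolkit installed on the host; the image name and mount paths are hypothetical.

```python
import shlex
from typing import Optional


def docker_run_command(
    image: str,
    command: list[str],
    gpus: Optional[str] = "all",
    mounts: Optional[dict[str, str]] = None,
) -> list[str]:
    """Build (but do not run) a `docker run` argument list for a training job."""
    args = ["docker", "run", "--rm"]  # --rm: delete the container when it exits
    if gpus is not None:
        # --gpus requires the NVIDIA Container Toolkit on the host.
        args += ["--gpus", gpus]
    for host_path, container_path in (mounts or {}).items():
        # Bind-mount datasets or checkpoints so they persist outside the container.
        args += ["-v", f"{host_path}:{container_path}"]
    return args + [image] + list(command)


if __name__ == "__main__":
    # Hypothetical image name and paths; pass gpus=None on a CPU-only machine.
    cmd = docker_run_command(
        "my-training-image",
        ["python", "train.py"],
        mounts={"/data": "/workspace/data"},
    )
    print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` would launch the container. Running `nvidia-smi` inside a CUDA-enabled container started with `--gpus all` is a common way to confirm that the GPUs are visible before training.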


© 2022 Aiex.ai All Rights Reserved.