Contact Info

133 East Esplanade Ave, North Vancouver, Canada

Expansive data I/O tools

Extensive data management tools

Dataset analysis tools

Data generation tools to increase yields

Top-of-the-line hardware available 24/7

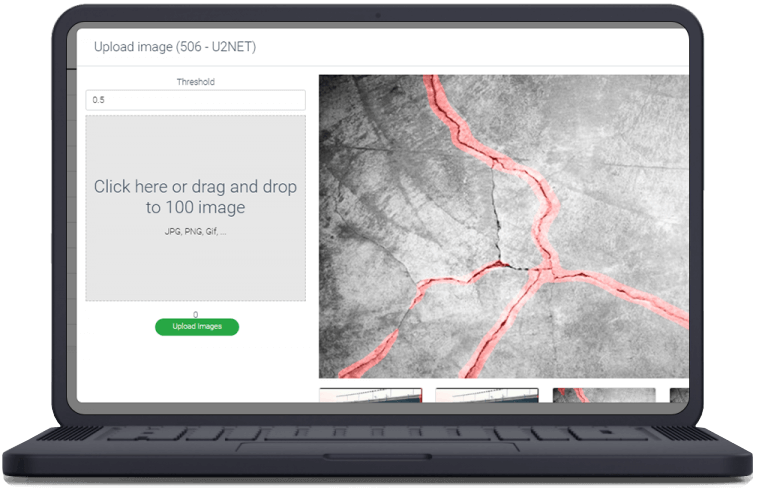

The AIEX Deep Learning platform provides all the tools you need for a complete deep learning workflow, from data management to model training and, finally, deployment of the trained models. You can use the trained models to transform your visual inspections, saving time and money while increasing accuracy and speed.

High-end hardware for real-time 24/7 inferences

Transformation in the automotive industry

Discover how AI is helping shape the future

Cutting-edge, 24/7 on-premise inspections

See how AI helps us build safer workspaces

Finding quality data in large quantities often proves very challenging for deep learning teams. This is where augmentation techniques come into play: they build new data from the data you have already gathered, enlarging your dataset and easing the burden of data collection.
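As a rough illustration of the idea (not AIEX's own augmentation pipeline, which this page does not describe), the sketch below uses NumPy to derive several new training images from a single existing image through flips, rotations, and a brightness shift. The helper name `augment` and the particular transforms are assumptions chosen for illustration.

```python
import numpy as np


def augment(image: np.ndarray, brightness_delta: int = 30) -> list[np.ndarray]:
    """Return new images derived from one H x W x C uint8 image.

    Hypothetical helper for illustration; the transforms chosen here
    (flips, 90-degree rotations, brightness shift) are assumptions.
    """
    variants = [
        np.fliplr(image),      # mirror left-right
        np.flipud(image),      # mirror top-bottom
        np.rot90(image, k=1),  # rotate 90 degrees counter-clockwise (swaps H and W)
        np.rot90(image, k=2),  # rotate 180 degrees
    ]
    # Brighten by widening to int16 first so the addition cannot overflow,
    # then clip back into the valid 0..255 pixel range.
    brighter = np.clip(image.astype(np.int16) + brightness_delta, 0, 255)
    variants.append(brighter.astype(np.uint8))
    return variants


# Usage: one gathered image becomes five extra training samples.
rng = np.random.default_rng(0)
original = rng.integers(0, 256, size=(64, 48, 3)).astype(np.uint8)
augmented = augment(original)
print(len(augmented))  # 5
```

For detection or segmentation tasks, the annotations (bounding boxes, masks) would have to be transformed together with the pixels; this sketch only handles the image itself.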

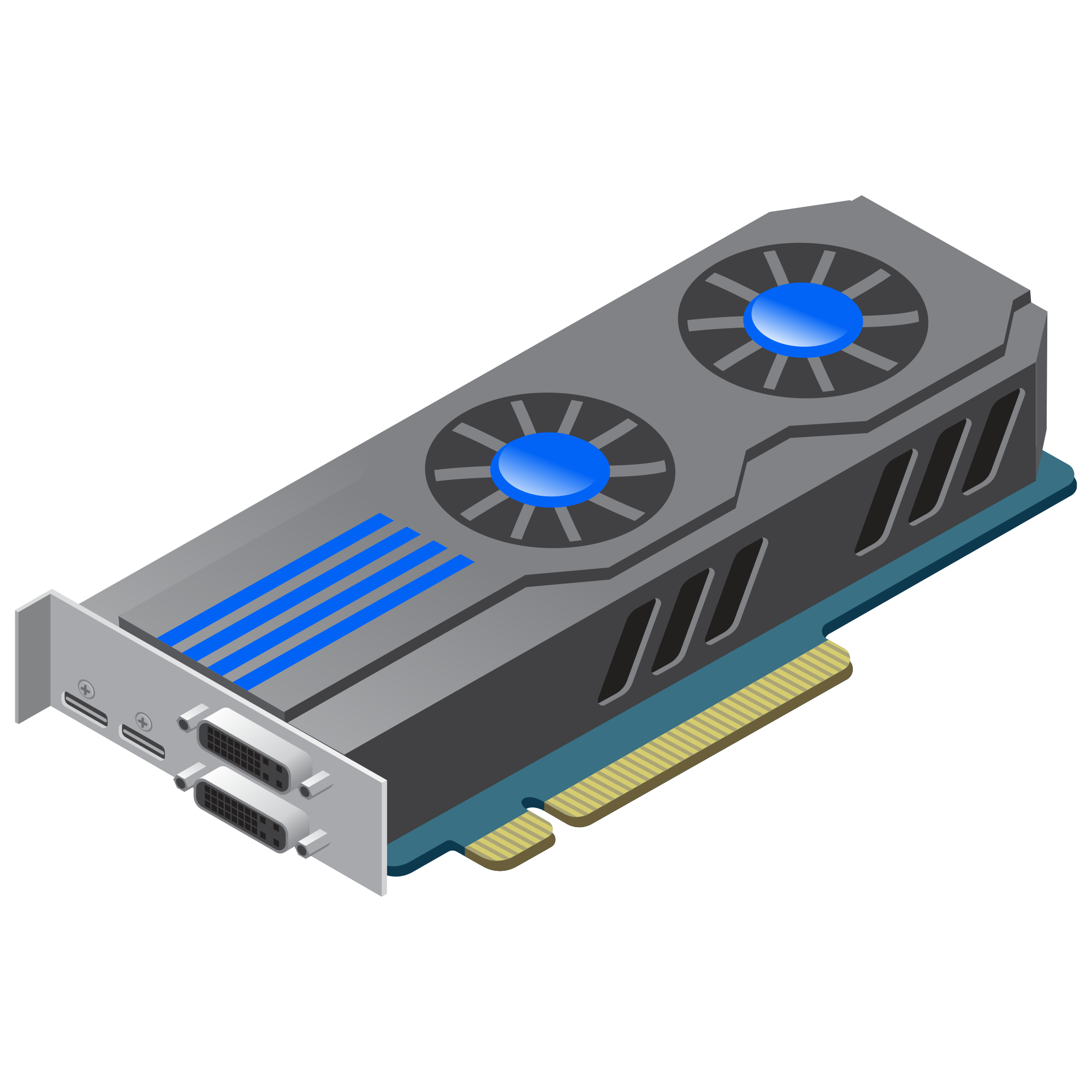

Deploy your models on the most powerful consumer GPUs

Infer in real-time and get results instantly

Don’t worry about maintenance; infer anytime, anywhere

Training a high-performing model is only part of the journey. Actually putting the model into use is the rest of it.

Deployment can be as tricky as training itself. Not only does it require relatively powerful hardware, it also requires coding to get it up and running.

We don’t settle for good: our infrastructure is built on the most powerful consumer GPUs on the market, so you get the fastest inferences possible.

We deploy only the best hardware available on the market to make sure your inferences are processed quickly and in real time (the time required depends on the model, the inferred image, and the overall system load).

You don’t have to worry about maintenance or DevOps procedures. Our systems are available 24/7 with a projected downtime of less than 0.1%, and you can run inferences through our APIs from anywhere in the world.

The entire process, from gathering and annotating the dataset to training and deployment, requires no coding, so you can start training your models quickly and deploy them easily.

Use AI and machine learning at the edge

Deep learning runs on brute computing force. Trillions of mathematical operations take place in fractions of a second to run computer vision models, and this computing power is provided by the hardware, mostly GPUs. The faster the hardware, the quicker the results, but powerful hardware is not cheap, and maintaining it is a separate problem of its own. At AIEX, we provide a cluster of RTX 3090 GPUs, the top-of-the-line, most powerful consumer GPU on the market, to make your experience seamless and quick. You don’t have to spend large sums on hardware or worry about maintaining it.

Think about a large company with manufacturing sites spread across the country. It would have to create and maintain a very costly infrastructure, costing upwards of tens of thousands of dollars, just to gather data from its manufacturing sites and run that data through its models. With our online, cloud-based solution, all you need to do is send data over the internet to our cloud, and we take care of the rest. We give you real-time results from your model 24/7, anywhere in the world. You don’t need to worry about maintaining infrastructure or the difficulties of building an inference network.

Deploy into any public, private, or classified software and hardware environment: on any cloud, on air-gapped bare metal, or at the edge. Take advantage of edge-optimized model architectures that offer advanced predictive capabilities without taking up a ton of on-device memory.

© 2022 Aiex.ai All Rights Reserved.