Expansive data I/O tools

Extensive data management tools

Dataset analysis tools

Data generation tools to increase yields

Top of the line hardware available 24/7

AIEX Deep Learning platform provides you with all the tools necessary for a complete Deep Learning workflow, from data management to model training and, finally, deployment of the trained models. You can easily transform your visual inspections using the trained models, saving time and money while increasing accuracy and speed.

There are many options for running deep learning applications in the cloud today. Providers such as AWS, Alibaba Cloud, Azure, and Huawei offer a variety of hardware, including CPUs and GPUs, and Google Cloud also offers TPUs. Choosing the right platform requires careful consideration and planning to get the best performance at the lowest cost, but a few guidelines can help you decide which platform is best for your deep learning application. In this article, we'll give a quick overview of these processors and help you pick the best one for your deep learning needs.

All smart devices, from computers to smartphones to network switches, have a central processing unit (CPU) at their core. A CPU is a general-purpose processor with multiple cores and a large cache, which allows it to run multiple software threads simultaneously. The CPU performs many system functions and coordinates the other components, such as memory and graphics cards. Early CPUs had only one processing core; thanks to advances in technology, modern CPUs have multiple cores that enable multi-threading, typically ranging from 2 to 64.
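As a rough illustration of multi-core execution, the Python sketch below splits one computation into independent chunks and hands them to a thread pool sized by `os.cpu_count()`. The function name `parallel_sum_of_squares` is hypothetical, and the sketch relies on NumPy typically releasing the GIL inside numeric routines such as `np.dot`, so the threads can occupy separate cores; pure-Python work would not scale this way.

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import Optional

import numpy as np


def parallel_sum_of_squares(x: np.ndarray, workers: Optional[int] = None) -> float:
    """Split x into one chunk per worker and reduce the chunks concurrently."""
    # os.cpu_count() reports logical CPUs (hardware threads), which may exceed physical cores.
    workers = workers or os.cpu_count() or 1
    chunks = np.array_split(x, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each chunk is an independent task; NumPy typically releases the GIL
        # inside np.dot, so the threads can run on different cores at once.
        partials = list(pool.map(lambda c: float(np.dot(c, c)), chunks))
    # Combining the partial results is the only sequential step.
    return sum(partials)


if __name__ == "__main__":
    data = np.arange(1_000_000, dtype=np.float64)
    print(f"{os.cpu_count()} logical CPUs")
    print(parallel_sum_of_squares(data))
```

Even on a 64-core machine, this divides the work into at most a few dozen large chunks, whereas a GPU spreads similar work across thousands of cores.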

The CPU has several key features, including:

Despite their high performance, modern CPUs are not well-suited for deep learning applications. Their relatively small number of cores is optimized for running a few tasks very quickly in sequence rather than many tasks at once, which makes them less efficient than GPUs for deep learning. As more data is used for training and prediction, the CPU increasingly struggles to keep up with the workload. Deep learning, by contrast, benefits from performing many operations simultaneously: the more operations run in parallel, the faster a model learns. With thousands of cores, GPUs are well-suited for training deep learning models and can process parallel tasks up to three times faster than CPUs.

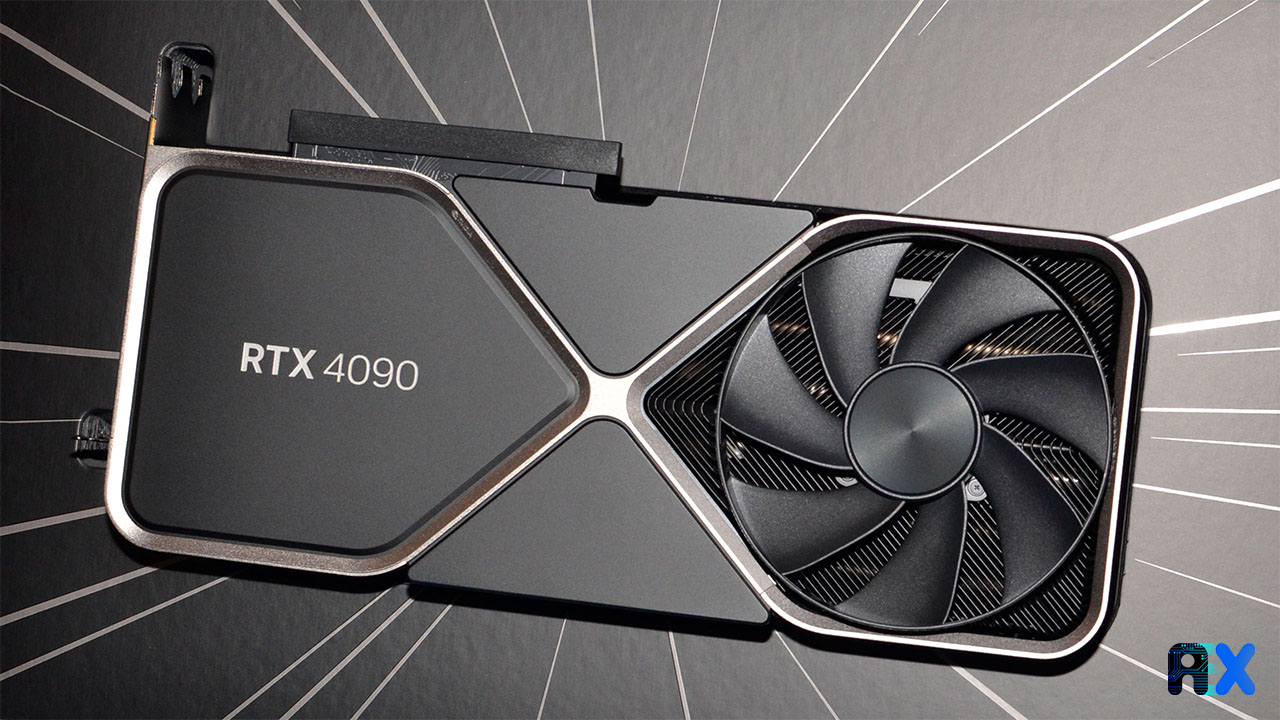

Alongside the CPU, the Graphics Processing Unit (GPU) acts as a performance accelerator. Unlike CPUs, GPUs have thousands of simpler cores that can break complex problems down into thousands or even millions of parallel tasks. This massive parallelism accelerates applications such as graphics processing, video rendering, machine learning, and even Bitcoin mining.
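To illustrate how a GPU breaks a problem into many parallel tasks, the sketch below emulates, in plain Python with NumPy, a CUDA-style kernel launch for a matrix multiply: a grid of blocks, each containing a block of threads, where each thread computes exactly one output element. The names `matmul_kernel`, `launch_2d`, and `gpu_style_matmul` are hypothetical, and this is not CUDA code; the loops run sequentially here, whereas a real GPU would run these threads concurrently across its cores.

```python
import numpy as np


def matmul_kernel(A, B, C, row, col):
    """Work done by one hypothetical GPU thread: a single output element."""
    if row < C.shape[0] and col < C.shape[1]:  # bounds check, as in a real kernel
        C[row, col] = np.dot(A[row, :], B[:, col])


def launch_2d(kernel, grid, block, *args):
    """Sequentially emulate a 2-D grid launch; a GPU would run these threads in parallel."""
    for by in range(grid[0]):
        for bx in range(grid[1]):
            for ty in range(block[0]):
                for tx in range(block[1]):
                    # Global thread coordinates, analogous to blockIdx * blockDim + threadIdx.
                    row = by * block[0] + ty
                    col = bx * block[1] + tx
                    kernel(*args, row, col)


def gpu_style_matmul(A, B, block=(16, 16)):
    C = np.zeros((A.shape[0], B.shape[1]))
    # Enough blocks to cover every output element (ceiling division).
    grid = (-(-C.shape[0] // block[0]), -(-C.shape[1] // block[1]))
    launch_2d(matmul_kernel, grid, block, A, B, C)
    return C


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    A = rng.standard_normal((37, 20))
    B = rng.standard_normal((20, 29))
    print(np.allclose(gpu_style_matmul(A, B), A @ B))
```

Because no thread depends on another thread's result, the order of the loops in `launch_2d` does not matter, and that independence is what lets a GPU schedule the threads in parallel.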

The GPU has several key features:

Deep learning is based on neural networks with three or more layers. Within a layer, the computation for each neuron, and for each example in a batch, is independent of the others, so these computations can run in parallel. As a result, GPUs are better suited than CPUs for processing the large amounts of data and complex mathematical computations involved in neural network training.
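This independence can be checked directly. The NumPy sketch below builds a hypothetical three-layer network (the layer sizes and random weights are made up for illustration), computes each layer for a whole batch as a single matrix multiply, and confirms that running the examples one at a time gives the same outputs. That batched matrix multiply is the kind of work a GPU spreads across its cores.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical layer sizes: 8 inputs -> 16 -> 16 -> 3 outputs.
sizes = [8, 16, 16, 3]
weights = [rng.standard_normal((m, n)) * 0.1 for m, n in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n) for n in sizes[1:]]


def forward(x):
    """Forward pass; x has shape (batch, 8). Each layer is one matrix multiply."""
    for i, (W, b) in enumerate(zip(weights, biases)):
        # Every neuron in the layer, for every example, is computed in this one operation.
        x = x @ W + b
        if i < len(weights) - 1:
            x = np.maximum(x, 0.0)  # ReLU on the hidden layers
    return x


batch = rng.standard_normal((32, 8))
together = forward(batch)  # all 32 examples at once
one_by_one = np.stack([forward(row[None, :])[0] for row in batch])  # sequentially

print(together.shape, np.allclose(together, one_by_one))
```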

Tensor Processing Units (TPUs) are specialized hardware for machine learning and deep learning applications. Developed by Google, TPUs have been in use internally at Google since 2015 and were made available to the public in 2018. They were designed to accelerate TensorFlow, the deep learning framework developed by the Google Brain team, and draw on Google's expertise in machine learning to deliver performance benefits. TensorFlow provides advanced tools and libraries that allow users to easily build and run machine learning applications.

TPUs are particularly well-suited for deep learning models with large and complex neural networks. They can significantly accelerate the training of these models, making it possible to complete training in hours rather than weeks. While CPUs and GPUs can handle machine learning and deep learning tasks to a certain extent, the largest of these workloads strain a GPU and, even more so, a CPU. TPUs take things to a whole new level. At the heart of a TPU is a matrix multiply unit: an N×N grid of multiply-accumulate units through which data flows directly from one unit to the next. This design allows a TPU to perform more than one hundred thousand operations per cycle, far more than a CPU or GPU.
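An N×N grid of multiply-accumulate units with data flowing between neighbours is known as a systolic array. The Python sketch below simulates one cycle by cycle, assuming the simpler output-stationary dataflow, in which each unit keeps one output element while inputs stream past; this is not necessarily the dataflow Google's hardware uses, and the function names are hypothetical. The helper `ops_per_cycle` shows the arithmetic behind the "more than one hundred thousand" figure: the first-generation TPU's matrix unit was a 256 × 256 array of 65,536 multiply-accumulate units, and counting each multiply-accumulate as two operations gives 131,072 operations per cycle.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def systolic_matmul(A: Matrix, B: Matrix) -> Tuple[Matrix, int]:
    """Cycle-by-cycle sketch of an output-stationary systolic array computing A @ B.

    Processing element (PE) (i, j) holds the running sum for C[i][j]. Rows of A
    enter from the left and columns of B from the top, each delayed by one cycle
    per row/column, so A[i][k] and B[k][j] meet in PE (i, j) on cycle i + j + k.
    """
    n, k, m = len(A), len(B), len(B[0])
    acc = [[0.0] * m for _ in range(n)]    # one accumulator per PE
    a_reg = [[0.0] * m for _ in range(n)]  # A values moving right
    b_reg = [[0.0] * m for _ in range(n)]  # B values moving down
    cycles = n + m + k - 2                 # last pair meets on cycle (n-1)+(m-1)+(k-1)
    for t in range(cycles):
        # 1. Shift every value one PE to the right (A) or down (B).
        for i in range(n):
            for j in range(m - 1, 0, -1):
                a_reg[i][j] = a_reg[i][j - 1]
        for i in range(n - 1, 0, -1):
            for j in range(m):
                b_reg[i][j] = b_reg[i - 1][j]
        # 2. Feed the skewed inputs in at the left and top edges (zeros when idle).
        for i in range(n):
            a_reg[i][0] = A[i][t - i] if 0 <= t - i < k else 0.0
        for j in range(m):
            b_reg[0][j] = B[t - j][j] if 0 <= t - j < k else 0.0
        # 3. Every PE multiplies its two inputs and accumulates, all in the same cycle.
        for i in range(n):
            for j in range(m):
                acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc, cycles


def ops_per_cycle(n: int) -> int:
    """Peak operations per cycle for an n x n MAC array (multiply + add = 2 ops)."""
    return 2 * n * n


if __name__ == "__main__":
    C, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
    print(C, cycles)           # [[19.0, 22.0], [43.0, 50.0]] 4
    print(ops_per_cycle(256))  # 131072
```

In the simulation, step 3 is a Python loop, but in hardware all N × N units fire in the same clock cycle, which is where the per-cycle throughput comes from.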

AIEX provides a complete computer vision platform that supports different GPUs, which means you don’t have to worry about choosing a processor or coding your models. You can simply select your model and train it on the intended processor using your prepared dataset.

© 2022 Aiex.ai All Rights Reserved.