Ensemble machine learning combines multiple weak learning models, also known as base models, to improve the accuracy and stability of predictions. The approach is particularly useful for complex problems where a single model may struggle to capture all the nuances and variations in the data. A good ensemble should have both low bias and low variance. Both properties are desirable, but they usually trade off against each other: reducing one tends to increase the other. Base models rarely perform well on their own because they suffer from either high bias or high variance, but properly combined they can produce a more accurate and stable model.

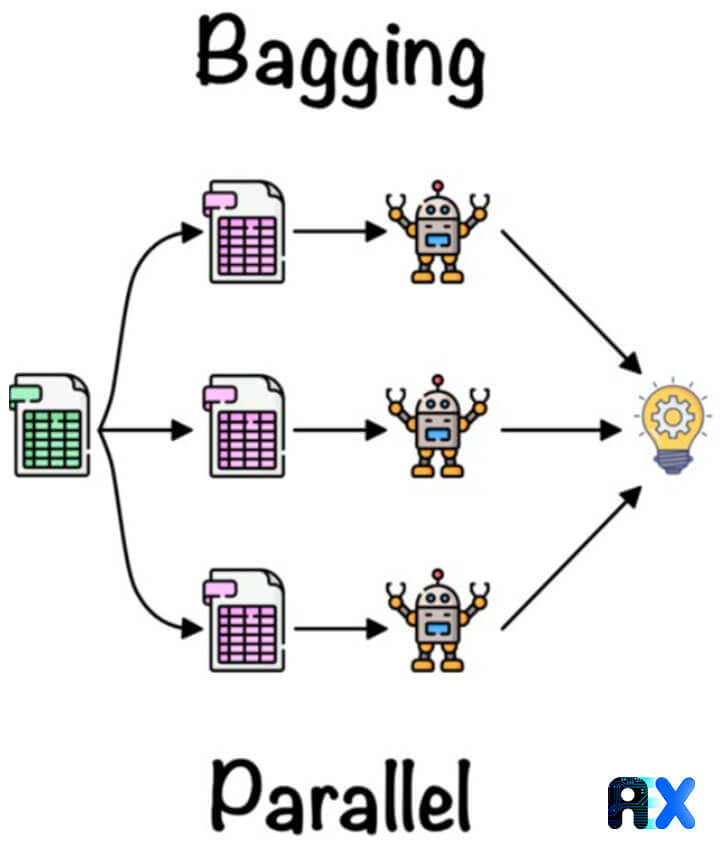
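
The trade-off between bias and variance can be made concrete for squared-error loss. As a sketch, assume the target is $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and let $\hat{f}$ be a model trained on a random training set. These symbols are introduced here for illustration and do not come from the original text. Under those assumptions the expected error splits into three parts:

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
= \big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2
+ \mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]
+ \sigma^2
$$

The first term is the squared bias, the second is the variance, and the third is irreducible noise. Averaging $M$ base models that each have variance $s^2$ and pairwise correlation $\rho$ gives a variance of $\rho s^2 + \frac{1-\rho}{M} s^2$, which is one way to see why combining many weakly correlated models tends to stabilize predictions.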

Obtaining good results from ensemble models requires the right choice of learning algorithm and the right way of combining the base models. In many cases, especially with bagging and boosting, a single base learning algorithm is used, so the ensemble consists of several models of the same type that are trained in different ways. The choice of model depends on several characteristics of the problem, including the amount of data and its dimensionality.

There are three general methods for combining base models in ensemble machine learning: bagging, boosting, and stacking.

The Bagging method, short for Bootstrap Aggregating, is an ensemble machine learning meta-algorithm that uses multiple homogeneous base models. Each base model is trained on a bootstrap sample of the training data (a random sample drawn with replacement), and the models learn in parallel and independently of one another. The final prediction is obtained by averaging the predictions of all the models for regression, or by majority vote for classification. This method is designed to improve the accuracy and stability of statistical classification and regression algorithms by reducing variance and helping to prevent overfitting. Decision trees are a common choice of base model for bagging; a random forest, for example, is built on bagged decision trees.

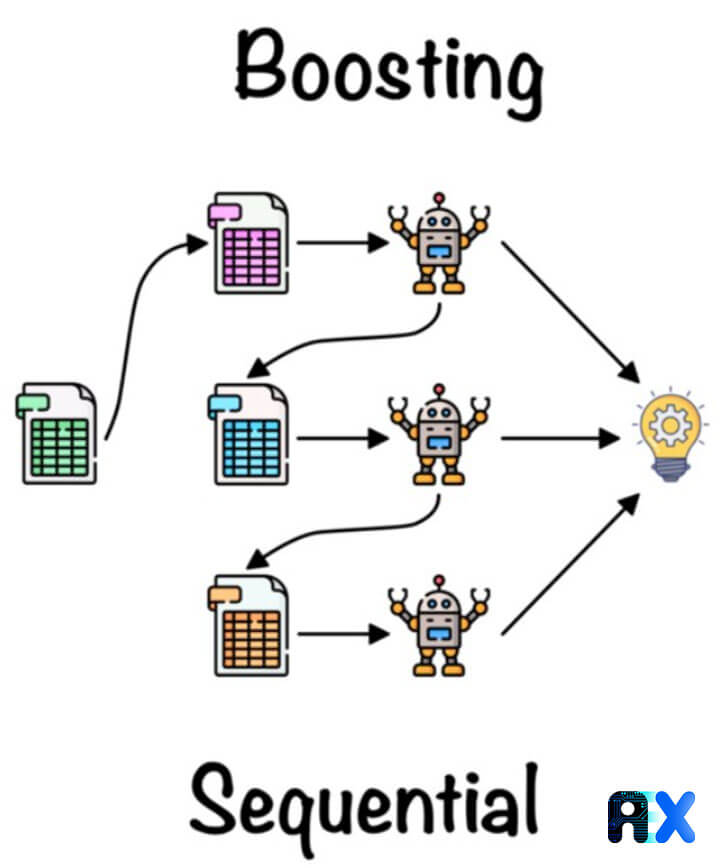
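
The bagging procedure can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production implementation: the base model is a hypothetical one-dimensional decision stump, the toy dataset is made up, and the names `fit_stump`, `bagging_fit`, and `bagging_predict` are chosen here for the example. In practice a library implementation would normally be used instead.

```python
import random
from collections import Counter


def fit_stump(xs, ys):
    """Fit a 1-D decision stump: one threshold, one label on each side."""
    best = None
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        left_label = Counter(left).most_common(1)[0][0]
        # If nothing falls to the right of t, reuse the left label.
        right_label = Counter(right).most_common(1)[0][0] if right else left_label
        errors = (sum(y != left_label for y in left)
                  + sum(y != right_label for y in right))
        if best is None or errors < best[0]:
            best = (errors, t, left_label, right_label)
    _, t, left_label, right_label = best
    return lambda x: left_label if x <= t else right_label


def bagging_fit(xs, ys, n_models=25, seed=0):
    """Train n_models stumps, each on its own bootstrap sample."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: draw n indices with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def bagging_predict(models, x):
    """Aggregate the base models by majority vote."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]


# Toy data (made up): class 0 for x < 5, class 1 for x >= 5.
xs = list(range(10))
ys = [0] * 5 + [1] * 5

models = bagging_fit(xs, ys)
low_prediction = bagging_predict(models, 1)
high_prediction = bagging_predict(models, 8)
print(low_prediction, high_prediction)
```

Each stump sees a slightly different resampled dataset, so individual thresholds vary; the vote across all stumps is what smooths out that variation.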

The Boosting method is another ensemble machine learning technique that uses multiple weak learning models to build a strong learner. Like Bagging, Boosting usually relies on homogeneous base models, but it trains them in series rather than in parallel, using sequential learning to improve predictions. The first model is built on the training data, and the second model is then created to correct the errors made by the first. This process is repeated until either the entire training dataset is predicted correctly or a maximum number of models has been added.

Some popular types of Boosting algorithms include AdaBoost, Gradient Tree Boosting, and XGBoost.

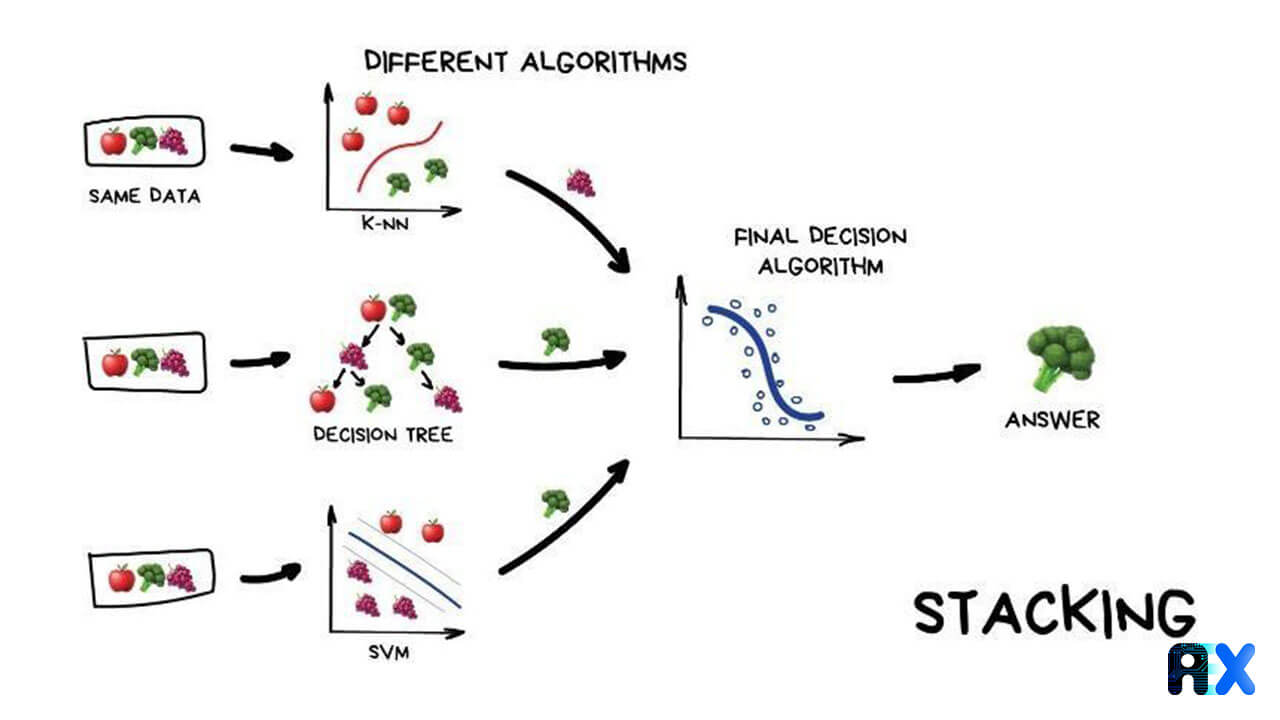
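
The sequential, error-correcting idea behind boosting can be sketched as follows. This example uses a simplified gradient-boosting-style loop for regression with squared error, where each new stump is fitted to the residuals left by the models before it. It is not AdaBoost or XGBoost, and the function names, the `learning_rate` value, and the toy data are assumptions made for illustration.

```python
def fit_regression_stump(xs, targets):
    """Fit a 1-D stump that predicts the mean target on each side of a threshold."""
    best = None
    # Skip the largest x so that both sides of the split are non-empty.
    for t in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    _, t, left_mean, right_mean = best
    return lambda x: left_mean if x <= t else right_mean


def boost_fit(xs, ys, n_rounds=50, learning_rate=0.5):
    """Add stumps one at a time, each trained on the current residuals."""
    base = sum(ys) / len(ys)          # start from the mean prediction
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        # Residuals are the errors the ensemble still makes.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_regression_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump(x) for x, p in zip(xs, preds)]

    def predict(x):
        return base + learning_rate * sum(s(x) for s in stumps)

    return predict


def mse(model, xs, ys):
    """Mean squared error of a model on the given data."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Toy data (made up): a simple quadratic curve.
xs = list(range(10))
ys = [x * x for x in xs]

one_round_mse = mse(boost_fit(xs, ys, n_rounds=1), xs, ys)
fifty_round_mse = mse(boost_fit(xs, ys, n_rounds=50), xs, ys)
print(one_round_mse, fifty_round_mse)
```

Comparing the training error after one round with the error after fifty rounds shows how each added model chips away at the mistakes of its predecessors. The maximum number of rounds plays the role of the stopping criterion described above.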

The Stacking method uses heterogeneous base models, trained in parallel, and combines them through meta-learning. In this method, base-level models are trained on the complete training set, and their predictions are used as input features for a meta-model, which is trained to make the final predictions. This approach allows the strengths of different base models to be combined and can lead to further improvements in accuracy over using a single model.
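
A minimal stacking sketch, under several assumptions: the two heterogeneous base models are a least-squares line and a 3-nearest-neighbour regressor, and the meta-model is a deliberately simple one that learns a single blending weight `w` between them. All names and the toy data are hypothetical. Following the description above, the base models are trained on the complete training set; practical implementations often feed the meta-model out-of-fold predictions instead, to limit leakage.

```python
import math


def fit_linear(xs, ys):
    """Base model A: ordinary least-squares line in one dimension."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    return lambda x: mean_y + slope * (x - mean_x)


def fit_knn(xs, ys, k=3):
    """Base model B: average of the k nearest training targets."""
    pairs = list(zip(xs, ys))

    def predict(x):
        nearest = sorted(pairs, key=lambda p: abs(p[0] - x))[:k]
        return sum(y for _, y in nearest) / len(nearest)

    return predict


def fit_stack(xs, ys):
    """Train both base models, then learn a blend y ~ w*a + (1 - w)*b."""
    model_a = fit_linear(xs, ys)
    model_b = fit_knn(xs, ys)
    # Base-model predictions become the meta-model's input features.
    a = [model_a(x) for x in xs]
    b = [model_b(x) for x in xs]
    # Closed-form least-squares solution for the single weight w.
    denom = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    if denom:
        w = sum((y - bi) * (ai - bi) for y, ai, bi in zip(ys, a, b)) / denom
    else:
        w = 0.5  # base models agree everywhere, so any weight works
    stacked = lambda x: w * model_a(x) + (1 - w) * model_b(x)
    return model_a, model_b, w, stacked


def mse(model, xs, ys):
    """Mean squared error of a model on the given data."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Toy data (made up): a linear trend with a periodic wiggle.
xs = [float(i) for i in range(20)]
ys = [2.0 * x + 3.0 * math.sin(x) for x in xs]

model_a, model_b, w, stacked = fit_stack(xs, ys)
linear_mse = mse(model_a, xs, ys)
knn_mse = mse(model_b, xs, ys)
stacked_mse = mse(stacked, xs, ys)
print(w, linear_mse, knn_mse, stacked_mse)
```

Because `w = 1` and `w = 0` reproduce the two base models exactly, the fitted blend can do no worse than either of them on the data the meta-model was trained on. Whether that advantage carries over to unseen data depends on how the meta-model's training predictions were produced.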

© 2022 Aiex.ai All Rights Reserved.