Contact Info

133 East Esplanade Ave, North Vancouver, Canada

Expansive data I/O tools

Extensive data management tools

Dataset analysis tools

Data generation tools to increase yields

Top of the line hardware available 24/7

The AIEX Deep Learning platform provides all the tools needed for a complete deep learning workflow, from data management to model training and, finally, deployment of the trained models. By using the trained models to transform your visual inspections, you can save time and money while increasing accuracy and speed.

High-end hardware for real-time 24/7 inferences

Every year, an increasing amount of waste, mostly plastics, finds its way into the ocean, endangering marine life and changing ocean ecosystems. In addition to preventing trash from entering the water, another way to reduce water pollution is to collect the existing trash.

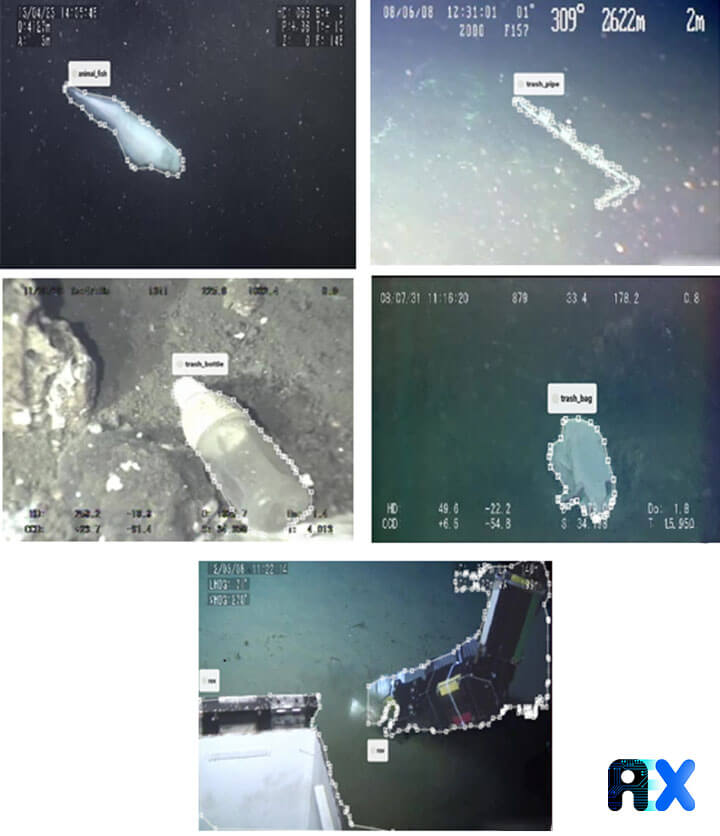

Because trash in the water sinks into the depths over time, it is difficult for humans to collect it. We can do this faster and more accurately by training robots with deep learning methods. In this article, we train a deep learning model to identify and segment five categories of objects found underwater: trash bottles, trash bags, trash pipes, ROVs (remotely operated underwater vehicles), and fish.

Sea animals such as fish, turtles, seabirds, and seals can become entangled in underwater trash, which restricts their movement and can even wound them. They may also mistake the trash for food and eat it, which can cause illness, damage their internal organs, or even kill them.

These problems do not threaten only the aquatic world: other animals and humans who eat polluted seafood can also become sick.

Undoubtedly, these issues will only get worse unless we take fast and effective action to solve the problem.

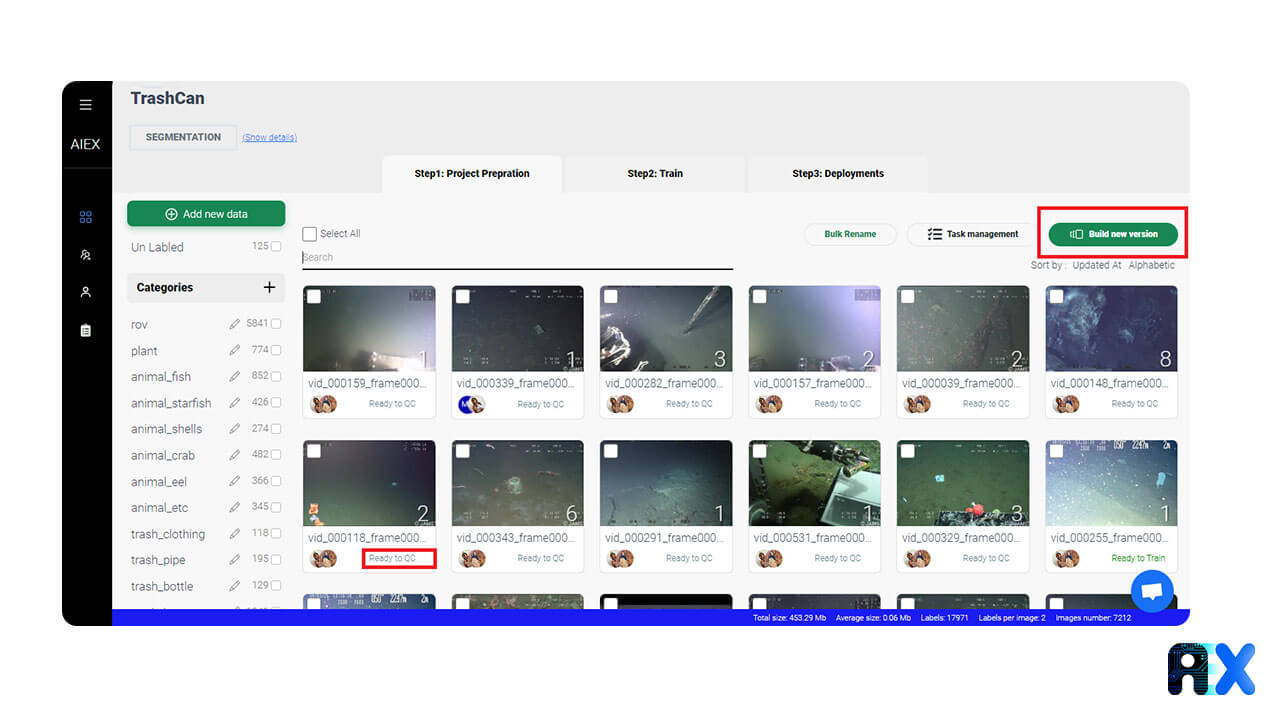

The first step in training a deep learning model is to collect data or use an existing dataset. This article uses “TrashCan 1.0: An Instance-Segmentation Labeled Dataset of Trash Observations”, which was published in 2020 by researchers at the University of Minnesota using images from the J-EDI deep-sea image library curated by the Japan Agency for Marine-Earth Science and Technology (JAMSTEC). The TrashCan dataset has 7,212 annotated images and 22 classes, covering a wide variety of undersea flora and fauna, ROVs, and trash. For simplicity, however, we use only five of these classes in this project.
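Using only five of the 22 classes means filtering the annotation file before training. The sketch below assumes the annotations are stored as COCO-style JSON (with `images`, `annotations`, and `categories` lists) and that the class names match the ones in `KEEP_CLASSES`; both are assumptions to check against your copy of the dataset, and `filter_coco_classes` is a hypothetical helper, not part of the AIEX platform.

```python
import json
from typing import Any, Dict, Set

# Assumed class names for the five categories; verify them against your copy of the dataset.
KEEP_CLASSES: Set[str] = {"trash_bottle", "trash_bag", "trash_pipe", "rov", "animal_fish"}


def filter_coco_classes(coco: Dict[str, Any], keep: Set[str]) -> Dict[str, Any]:
    """Return a new COCO-style dict containing only the categories named in `keep`.

    Category ids are remapped to 1..N, and images left without any annotation
    are dropped. The input dict is not modified.
    """
    # Sort by original id so the new ids are assigned deterministically.
    kept_cats = sorted(
        (c for c in coco["categories"] if c["name"] in keep), key=lambda c: c["id"]
    )
    id_map = {c["id"]: new_id for new_id, c in enumerate(kept_cats, start=1)}

    annotations = [
        dict(ann, category_id=id_map[ann["category_id"]])  # copy with the remapped id
        for ann in coco["annotations"]
        if ann["category_id"] in id_map
    ]
    used_images = {ann["image_id"] for ann in annotations}
    images = [img for img in coco["images"] if img["id"] in used_images]
    categories = [dict(c, id=id_map[c["id"]]) for c in kept_cats]
    return {"images": images, "annotations": annotations, "categories": categories}


if __name__ == "__main__":
    # Tiny hand-made example; for a real file you would use json.load on the annotation file.
    toy = {
        "images": [{"id": 1}, {"id": 2}],
        "annotations": [
            {"id": 10, "image_id": 1, "category_id": 3},
            {"id": 11, "image_id": 2, "category_id": 7},
        ],
        "categories": [{"id": 3, "name": "trash_bottle"}, {"id": 7, "name": "plant"}],
    }
    filtered = filter_coco_classes(toy, KEEP_CLASSES)
    print(json.dumps(filtered["categories"]))  # [{"id": 1, "name": "trash_bottle"}]
```

Remapping the ids to a compact range keeps the class indices contiguous, which many training pipelines expect.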

Since we are using a public dataset, we can upload it directly to the AIEX platform. If we were building our own dataset, we could use the platform’s search engine to find images and then annotate them.

After uploading the dataset (a separate article of ours explains how to do this), we should verify all annotations and then set the state of every image to “ready to train”. We then click “Build new version” to split the images into training, validation, and test sets.
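The platform performs this split for us, but the idea is easy to illustrate. The following is a minimal sketch, not how AIEX implements “Build new version”: the helper `split_dataset`, the 70/20/10 ratios, and the seed are assumptions chosen for illustration.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(
    image_ids: Sequence[int],
    train_frac: float = 0.7,
    val_frac: float = 0.2,
    seed: int = 42,
) -> Tuple[List[int], List[int], List[int]]:
    """Shuffle ids with a fixed seed and cut them into train/validation/test lists."""
    if not (train_frac > 0 and val_frac >= 0 and train_frac + val_frac <= 1):
        raise ValueError("fractions must be non-negative and sum to at most 1")
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)  # local RNG, so the global random state is untouched
    n_train = int(len(ids) * train_frac)
    n_val = int(len(ids) * val_frac)
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]  # whatever remains goes to the test set
    return train, val, test


if __name__ == "__main__":
    train, val, test = split_dataset(range(7212))
    print(len(train), len(val), len(test))  # 5048 1442 722
```

Shuffling with a fixed seed makes the split reproducible, so the same images always land in the same set and results from different training runs remain comparable.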

Now we need to select a model and train it to identify and segment trash in images. In the AIEX platform, we can select segmentation models from several frameworks, including PyTorch, TensorFlow, and TAO.

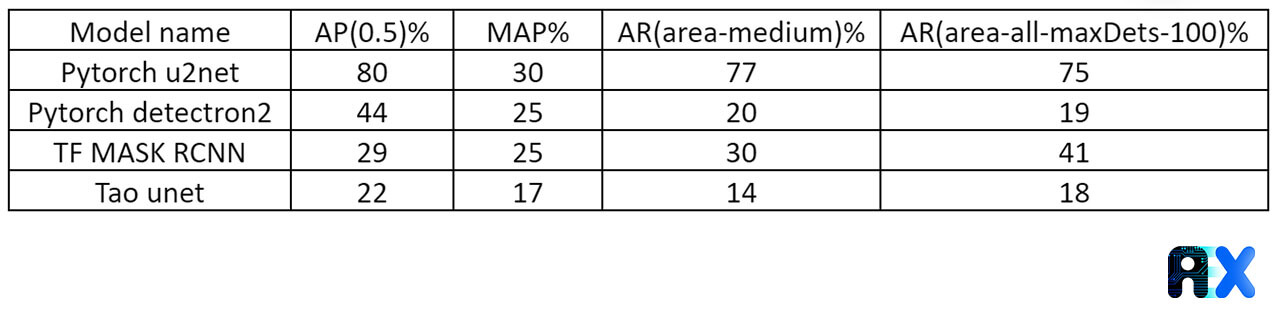

Table 1. Training results on the validation dataset.

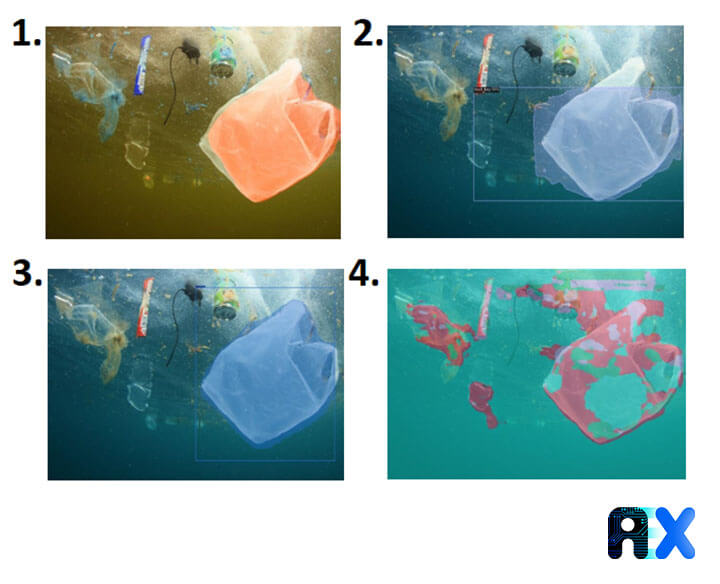

Running inference with the trained model on new images shows how well it has been trained. Here are some of our results.
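The article does not say which metric its accuracy figure refers to, but two common ways to score a predicted segmentation mask against the ground truth are pixel accuracy and intersection over union (IoU). The sketch below computes both on small binary masks stored as nested lists; the function names and the example masks are our own, for illustration only.

```python
from typing import Iterator, Sequence, Tuple

Mask = Sequence[Sequence[int]]  # rows of 0 (background) / 1 (object) values


def _pixel_pairs(pred: Mask, truth: Mask) -> Iterator[Tuple[int, int]]:
    """Yield (predicted, true) values pixel by pixel, checking that the shapes match."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of rows")
    for row_p, row_t in zip(pred, truth):
        if len(row_p) != len(row_t):
            raise ValueError("masks must have the same number of columns")
        yield from zip(row_p, row_t)


def pixel_accuracy(pred: Mask, truth: Mask) -> float:
    """Fraction of pixels whose predicted value equals the true value."""
    pairs = list(_pixel_pairs(pred, truth))
    if not pairs:
        raise ValueError("masks must not be empty")
    return sum(p == t for p, t in pairs) / len(pairs)


def iou(pred: Mask, truth: Mask) -> float:
    """Intersection over union of the foreground (value 1) pixels."""
    intersection = union = 0
    for p, t in _pixel_pairs(pred, truth):
        intersection += int(p == 1 and t == 1)
        union += int(p == 1 or t == 1)
    # Convention: if neither mask contains foreground, count the prediction as perfect.
    return 1.0 if union == 0 else intersection / union


if __name__ == "__main__":
    truth = [[0, 1, 1], [0, 1, 1], [0, 0, 0]]
    pred = [[0, 1, 1], [0, 0, 1], [0, 0, 1]]
    print(pixel_accuracy(pred, truth))  # 0.7777777777777778
    print(iou(pred, truth))  # 0.6
```

On this example, pixel accuracy (7/9) is noticeably higher than IoU (3/5): background pixels are easy to get right, so pixel accuracy tends to look better than IoU when the object covers only a small part of the image.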

The underwater trash dataset is hard to work with because underwater images are often of poor quality, so more training iterations are needed to achieve high accuracy. Different values should be tried for hyperparameters such as the learning rate (LR), momentum, and weight decay. Nonetheless, we obtained 80% accuracy with the PyTorch U2-Net model, which performed better than the other models we trained for this use case.
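To make the role of these three hyperparameters concrete, here is a sketch of a single SGD update with momentum and weight decay, following the formulation PyTorch's `torch.optim.SGD` uses when `dampening=0` and `nesterov=False`, together with a small grid search. The grid values, the helper names, and the toy quadratic loss standing in for real training are assumptions for illustration only; they are not the settings we used.

```python
import itertools
from typing import Dict, Iterator, List, Sequence, Tuple


def sgd_step(
    w: Sequence[float], v: Sequence[float], grad: Sequence[float],
    lr: float, momentum: float, weight_decay: float,
) -> Tuple[List[float], List[float]]:
    """One SGD update with momentum and L2-style weight decay."""
    g = [gi + weight_decay * wi for gi, wi in zip(grad, w)]  # weight decay pulls weights toward 0
    v_new = [momentum * vi + gi for vi, gi in zip(v, g)]     # momentum accumulates past gradients
    w_new = [wi - lr * vi for wi, vi in zip(w, v_new)]       # learning rate scales the step
    return w_new, v_new


def toy_loss(w: Sequence[float]) -> float:
    """Toy stand-in for a training loss: sum of squares, minimised at w = 0."""
    return sum(wi * wi for wi in w)


def train_toy(cfg: Dict[str, float], steps: int = 50) -> float:
    """Run `steps` SGD updates on the toy loss from a fixed start; return the final loss."""
    w = [1.0, -2.0]
    v = [0.0] * len(w)
    for _ in range(steps):
        grad = [2.0 * wi for wi in w]  # gradient of sum(w_i^2)
        w, v = sgd_step(w, v, grad, cfg["lr"], cfg["momentum"], cfg["weight_decay"])
    return toy_loss(w)


# Hypothetical search space; sensible values depend on the model and dataset.
GRID: Dict[str, List[float]] = {
    "lr": [1e-2, 1e-3, 1e-4],
    "momentum": [0.9, 0.99],
    "weight_decay": [0.0, 1e-4],
}


def grid_configs(grid: Dict[str, List[float]]) -> Iterator[Dict[str, float]]:
    """Yield every combination of the values in `grid` as a dict."""
    keys = sorted(grid)
    for values in itertools.product(*(grid[k] for k in keys)):
        yield dict(zip(keys, values))


if __name__ == "__main__":
    results = [(train_toy(cfg), cfg) for cfg in grid_configs(GRID)]
    best_loss, best_cfg = min(results, key=lambda r: r[0])
    print(f"tried {len(results)} configurations; best {best_cfg} with loss {best_loss:.4g}")
```

In a real project, `train_toy` would be replaced by a full training run scored on the validation set, which is why a grid over even three hyperparameters quickly becomes expensive.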

The UNet and U2-Net models used here are saliency models, and saliency models cannot distinguish classes from one another. Basically, the model identifies the most important, or main, object in an image. In other words, we cannot obtain a confidence score for each class, unlike R-CNN models, which are not saliency models and output a class name and a confidence score for each detected object.
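The difference shows up in the shape of the outputs. In the simplified sketch below, the data structures and helper names are hypothetical and do not reproduce the exact output format of any particular library: a saliency map holds one foreground probability per pixel and thresholds to a single unlabeled mask, while each instance-segmentation detection carries a class name and a confidence score that can be filtered on.

```python
from dataclasses import dataclass
from typing import List, Sequence


def saliency_to_mask(prob_map: Sequence[Sequence[float]], threshold: float = 0.5) -> List[List[int]]:
    """Binarise a per-pixel saliency map: the result says 'salient or not' and nothing about the class."""
    return [[1 if p >= threshold else 0 for p in row] for row in prob_map]


@dataclass
class Detection:
    """Simplified instance-segmentation result (hypothetical structure)."""
    label: str              # class name, e.g. "trash_bottle"
    score: float            # model confidence for this label
    mask: List[List[int]]   # binary mask of this one object


def confident_detections(dets: List[Detection], min_score: float = 0.5) -> List[Detection]:
    """Keep detections whose confidence is at least `min_score`; possible only because each has a score."""
    return [d for d in dets if d.score >= min_score]


if __name__ == "__main__":
    print(saliency_to_mask([[0.1, 0.8], [0.6, 0.3]]))  # [[0, 1], [1, 0]]
    dets = [
        Detection("trash_bottle", 0.92, [[0, 1], [0, 0]]),
        Detection("animal_fish", 0.41, [[0, 0], [1, 0]]),
    ]
    print([d.label for d in confident_detections(dets)])  # ['trash_bottle']
```

Because the saliency mask has no label or score, an accuracy figure for a saliency model measures a different task from the class-aware accuracy of a Mask R-CNN style model.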

Therefore, we cannot conclude that U2-Net achieved the best outcome in this project, because its results are not directly comparable with those of class-aware models.

A fairer approach is to compare models of the same kind: PyTorch U2-Net with TAO UNet, and PyTorch Detectron2 with TensorFlow Mask R-CNN. In this test, PyTorch U2-Net achieved better results than TAO UNet.

In future articles, we’ll explain more about saliency and other kinds of models.

1. https://chinadialogueocean.net/en/pollution/14200-how-does-plastic-pollution-affect-the-ocean/

2. https://conservancy.umn.edu/handle/11299/214865

3. https://detectwaste.ml/post/10-multidatasets-results/

4. https://repurpose.global/blog/post/what-is-the-effect-of-ocean-plastic-on-marine-life

© 2022 Aiex.ai All Rights Reserved.