
In the packaging industry, bottle caps are among the most challenging items to inspect for food quality assurance. Common issues include loosely fitted caps, scratched parts, and broken cap rings. The quality control (QC) department in a factory is responsible for detecting such defects before the products are shipped. This is a laborious and time-consuming step, even for skilled workers. To automate this procedure in real time and minimize human error, artificial intelligence (AI), specifically computer vision (CV), can be used. Such intelligent systems can also monitor the level of liquid inside the bottle. Intelligent packaging promotes social and practical values as well as high-dimensional data extraction, resulting in more competitive products and higher profits for owners.

Rutwik Kulkarni et al. from the Department of Computer Engineering at the Pune Institute of Computer Technology, India, presented a conference paper on the QC of bottle cap placement using CV at the 12th International Conference on Contemporary Computing (IC3), held in Noida, India. With this approach, it is possible to convert visual images into descriptive data and connect them to other processes in the production line.

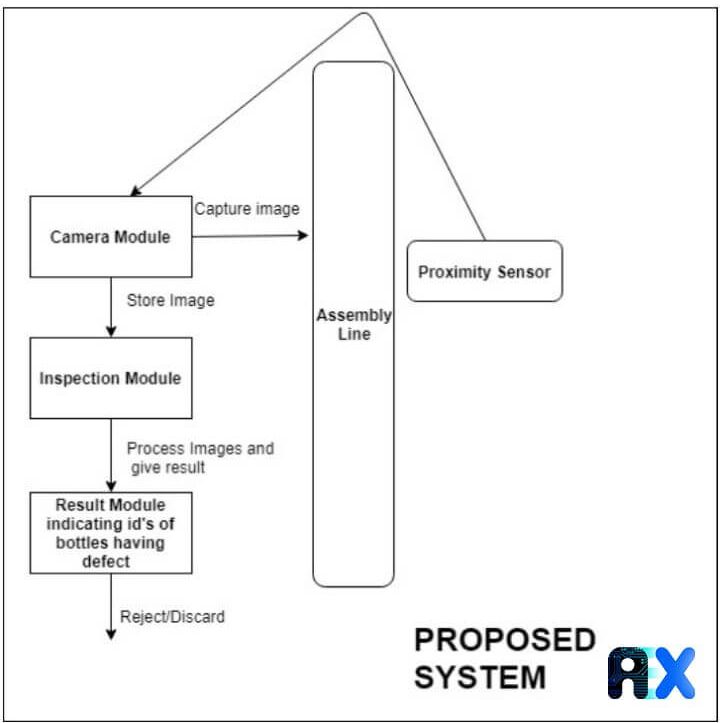

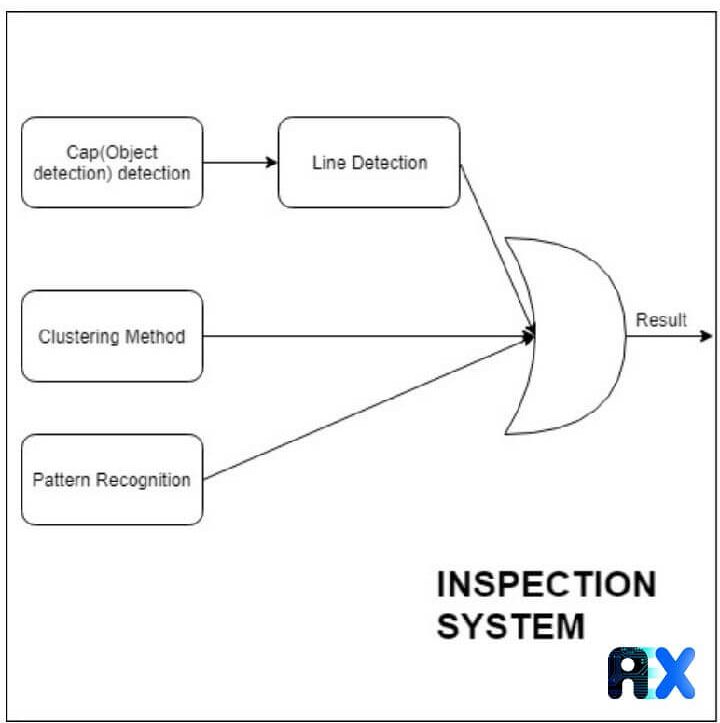

The automated visual inspection system (AVIS) for detecting bottle cap defects consists of four steps: pattern recognition, clustering, object detection, and line detection. Bottles, caps, liquids, and more can be easily identified using object detection algorithms.

Several research groups have reported using CV-based models to detect the cap position on bottles. In general, they convert images of bottles to grayscale and use the Canny edge detection algorithm to extract horizontal lines. The resulting horizontal mask is then used as a reference line: a bottle passes QC if its height matches the height of the reference line.
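The reference-line check can be illustrated with a minimal sketch, assuming a grayscale image held in a NumPy array. To keep the example dependency-light, a plain vertical-gradient edge map stands in for Canny edge detection (in OpenCV that step would be `cv2.Canny`). The function names, the gradient threshold, the row-coverage fraction, and the pixel tolerance are all illustrative assumptions, not values from the cited studies.

```python
import numpy as np

def top_horizontal_edge(gray, grad_thresh=50, min_fraction=0.2):
    """Return the row index of the topmost strong horizontal edge, or None.

    A simple vertical intensity gradient stands in for Canny here.
    """
    gray = gray.astype(np.int32)
    # Differences between vertically adjacent pixels: large values mark
    # horizontal edges such as the top of the cap.
    grad = np.abs(np.diff(gray, axis=0))
    edge_map = grad > grad_thresh
    # A horizontal line covers a sizeable fraction of one row.
    row_counts = edge_map.sum(axis=1)
    strong_rows = np.flatnonzero(row_counts >= min_fraction * gray.shape[1])
    if strong_rows.size == 0:
        return None
    # Gradient row i lies between image rows i and i + 1.
    return int(strong_rows[0]) + 1

def passes_height_check(gray, reference_row, tolerance_px=2):
    """Pass QC if the bottle top lies within tolerance of the reference line."""
    top = top_horizontal_edge(gray)
    return top is not None and abs(top - reference_row) <= tolerance_px

# Synthetic example: dark background, bright "bottle" whose top is at row 20.
img = np.zeros((100, 60), dtype=np.uint8)
img[20:, 20:40] = 200
print(passes_height_check(img, reference_row=20))
```

In a real setup, the reference row would come from a calibration image of a correctly capped bottle taken with the same fixed camera.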

To detect scratches on bottles, a pattern matching method is employed. A guided filter smooths the edges before a combination of a modified Hough transform and a hierarchical orientation search locates the position and orientation of the bottle caps. Finally, scratches can be identified by comparing the rotated edge image with the template edge image.

Kulkarni et al. took advantage of such studies and combined them to develop a state-of-the-art, versatile model with higher accuracy for the online detection of defects in bottles with various shapes, sizes, ingredients, etc.

The following equipment is used to capture images and train a model:

The benefits of using such a system are:

Synchronizing computation time with actual production speed: The suggested system minimizes computation time by synchronizing with the assembly line.

The authors used the OpenCV library in Python for the line detection and clustering algorithms. The TensorFlow library is used for object detection, and convolutional neural networks (CNNs) built with the Keras library are used for pattern recognition.

K-Means clustering is an unsupervised learning algorithm that splits the bottle image into many clusters, so that the cap can be identified as a distinct cluster. The algorithm works as follows:

1) Reading the image.

2) Applying K-Means clustering to split the image into clusters. For liquids with a similar color to that of the cap, aggressive clustering (i.e., K>20) should be applied.

3) Clustering segments of images with a similar bottle cap color.

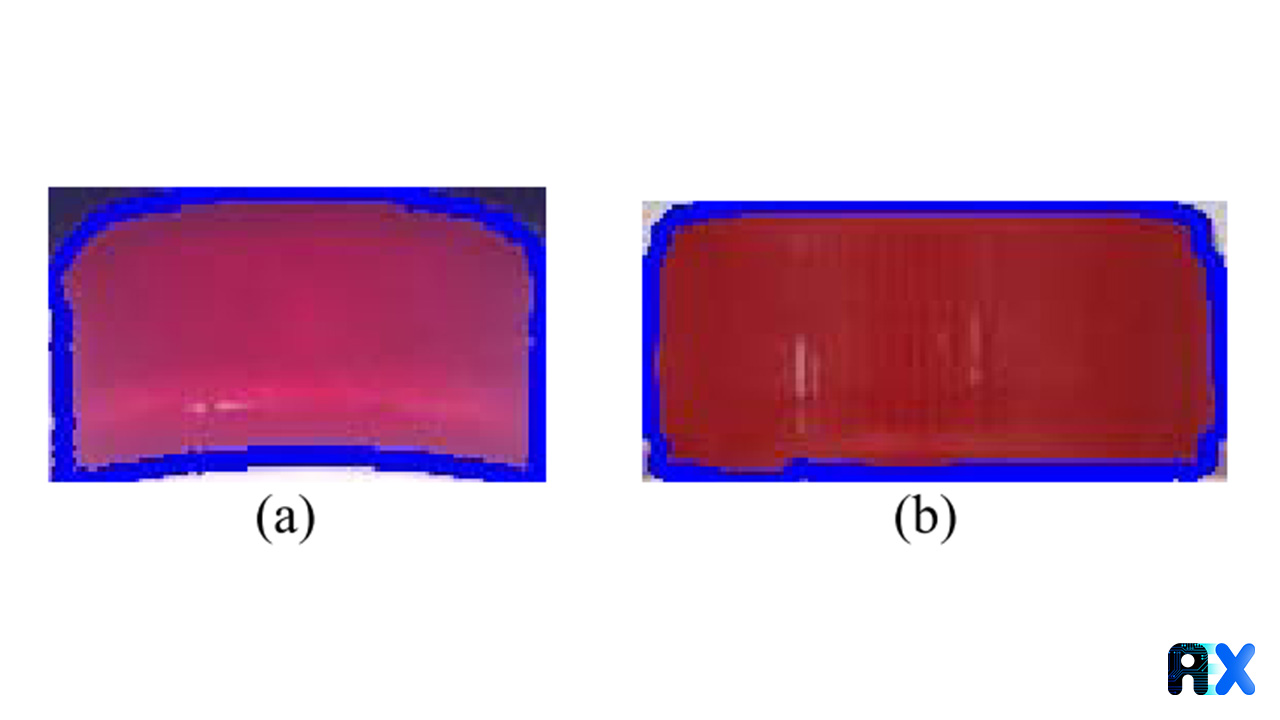

4) Converting the image to grayscale and defining contour lines to find the most connected clusters. In the case of a well-packed bottle, this cluster includes the ring below the cap.

5) Any displacement of the cap fitting is recognized by the contour line shape and the bounding box.

6) The well-packed cap exhibits a higher area than that of a misfitted cap (Figure 3).
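The steps above can be sketched roughly as follows. This is a simplified illustration, not the authors' implementation: a small NumPy k-means stands in for the OpenCV call (`cv2.kmeans`), the deterministic farthest-point initialization is an assumption chosen for reproducibility, and the cap region is taken as all pixels in the cluster closest to a known cap color rather than the largest connected contour. The helper names and the `cap_color` parameter are hypothetical.

```python
import numpy as np

def _assign(pixels, centers):
    """Label each pixel with the index of its nearest center."""
    dists = np.linalg.norm(pixels[:, None, :] - centers[None, :, :], axis=2)
    return dists.argmin(axis=1)

def kmeans_colors(pixels, k, iters=10):
    """Plain Lloyd's k-means on an (N, 3) float array of pixel colors."""
    # Farthest-point initialization: start from the first pixel, then keep
    # adding the pixel that is farthest from all centers chosen so far.
    centers = [pixels[0]]
    for _ in range(1, k):
        chosen = np.array(centers)
        d = np.linalg.norm(pixels[:, None, :] - chosen[None, :, :], axis=2).min(axis=1)
        centers.append(pixels[d.argmax()])
    centers = np.array(centers, dtype=np.float64)
    for _ in range(iters):
        labels = _assign(pixels, centers)
        for j in range(k):
            members = pixels[labels == j]
            if len(members):  # leave empty clusters where they are
                centers[j] = members.mean(axis=0)
    return _assign(pixels, centers), centers

def cap_region(image, cap_color, k=3):
    """Return (area, bounding_box) of the cluster closest to cap_color.

    bounding_box is (top, left, bottom, right) in pixel indices, or None
    if the chosen cluster is empty.
    """
    h, w, _ = image.shape
    pixels = image.reshape(-1, 3).astype(np.float64)
    labels, centers = kmeans_colors(pixels, k)
    target = np.asarray(cap_color, dtype=np.float64)
    cap_label = np.linalg.norm(centers - target, axis=1).argmin()
    mask = (labels == cap_label).reshape(h, w)
    if not mask.any():
        return 0, None
    rows, cols = np.nonzero(mask)
    bbox = (int(rows.min()), int(cols.min()), int(rows.max()), int(cols.max()))
    return int(mask.sum()), bbox

# Synthetic bottle: black background, amber liquid, red cap on top.
img = np.zeros((40, 30, 3), dtype=np.uint8)
img[20:40, 10:20] = (200, 150, 0)
img[10:20, 10:20] = (255, 0, 0)
area, bbox = cap_region(img, cap_color=(255, 0, 0), k=3)
print(area, bbox)
```

Comparing the returned area and bounding box against those of a known good bottle gives the pass/fail signal described in steps 5 and 6. Note that the distance computation here allocates an N × k × 3 array, so it only suits small images; a larger K, as suggested in step 2 for similar colors, makes this more costly.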

The authors use a supervised learning algorithm based on a neural network for the segmentation of the bottle cap image. A single shot multibox detector (SSD) is employed for cap detection. The SSD extracts feature maps and then applies convolutional filters to them to detect the bottle cap. According to the authors, this algorithm performs better than other object detection algorithms.

The line detection module can examine whether the cap is correctly fitted or not. To achieve that, the following steps should be followed:

As mentioned above, the authors use a CNN for pattern recognition on both correctly and incorrectly packaged bottles. Different filters are applied to the labeled data in order to extract features. An adaptive thresholding algorithm is employed to identify the rings, which makes the algorithm insensitive to color differences and helps it focus on differences in the bottle cap position.
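To illustrate why adaptive thresholding reduces sensitivity to color and lighting, here is a minimal NumPy sketch of mean-based adaptive thresholding. It is intended to mirror the behavior of OpenCV's `cv2.adaptiveThreshold` with the mean method and binary output, but the function name, block size, and offset `c` are assumptions chosen for the example, not settings reported by the authors.

```python
import numpy as np

def adaptive_threshold_mean(gray, block_size=11, c=5):
    """Binarize a grayscale image against its local mean.

    A pixel becomes 255 if it is brighter than (local mean - c), where the
    local mean is taken over a block_size x block_size window; else 0.
    """
    if block_size < 3 or block_size % 2 == 0:
        raise ValueError("block_size must be an odd integer >= 3")
    h, w = gray.shape
    pad = block_size // 2
    # Replicate border pixels so every window is full-sized.
    padded = np.pad(gray.astype(np.float64), pad, mode="edge")
    # Integral image with a leading zero row and column for box sums.
    integral = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))
    integral[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)
    b = block_size
    box_sum = (integral[b:b + h, b:b + w] - integral[:h, b:b + w]
               - integral[b:b + h, :w] + integral[:h, :w])
    local_mean = box_sum / (b * b)
    return np.where(gray > local_mean - c, 255, 0).astype(np.uint8)

# Synthetic example: a left-to-right brightness gradient with one darker
# horizontal line standing in for the ring below the cap.
gray = np.tile(np.linspace(50, 150, 60), (40, 1))
gray[20, :] -= 40
gray = gray.astype(np.uint8)
binary = adaptive_threshold_mean(gray)
print(binary[20].max(), binary[5].min())
```

Because each pixel is compared with its own neighborhood, the dark line is picked out along its whole length even though its absolute brightness varies across the image, which a single global threshold would not achieve.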

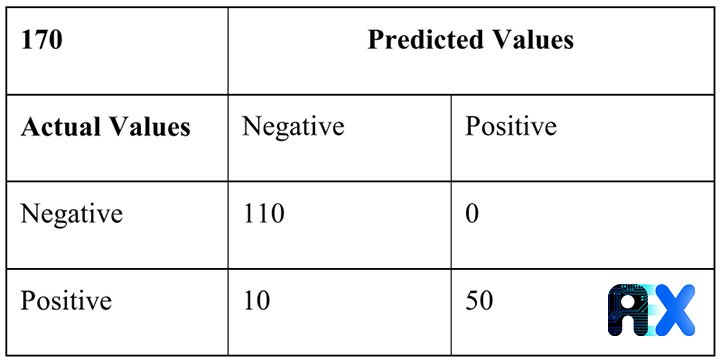

The model was trained on 400 bottle images, and its performance was evaluated on 170 thresholded images. Table 1 lists the test results. According to these results, the model performs well at picking out defective bottles.

Table 1. The confusion matrix for the trained model

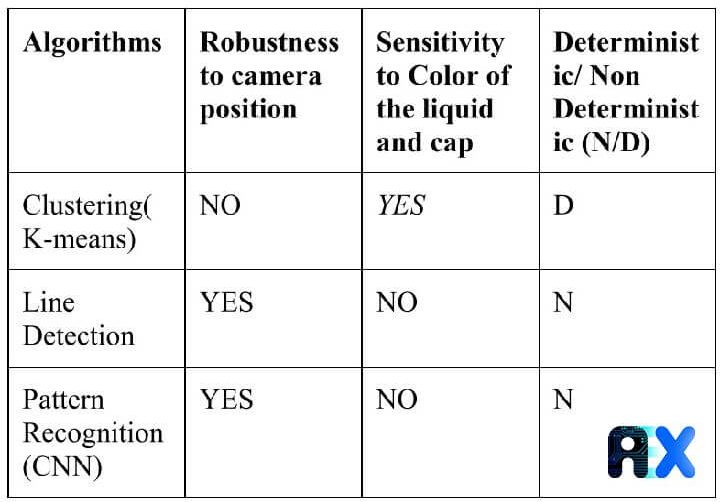
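For readers who want to turn a confusion matrix like Table 1 into summary metrics, the following sketch shows the standard calculations. The counts used in the example are placeholders chosen purely for illustration; they are not the values from Table 1, and treating "defective" as the positive class is an assumption.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, and recall from confusion-matrix counts.

    tp: defective bottles flagged as defective
    fp: good bottles flagged as defective
    fn: defective bottles that were missed
    tn: good bottles passed as good
    """
    total = tp + fp + fn + tn
    if total == 0:
        raise ValueError("confusion matrix is empty")
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }

# Placeholder counts for illustration only (NOT the paper's results).
metrics = classification_metrics(tp=8, fp=2, fn=1, tn=9)
print(metrics)
```

In a QC setting, recall on the defective class is usually the number to watch, since a missed defect ships to the customer while a false alarm only costs one discarded bottle.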

Furthermore, the authors compared the algorithms in terms of robustness to camera position, sensitivity to liquid color, and cap detection ability. The results are presented in Table 2. The K-Means clustering algorithm performs better than the others.

Table 2. Comparison of the various algorithms

The proposed model can precisely detect the displacement of bottle caps in real time and send a signal to robots to automatically discard the defective bottles. Although the authors only trained a model for bottle cap detection, a similar approach can be implemented to detect other defects like low liquid levels, floating components in the liquid, distortion, labeling faults, etc. Therefore, the authors’ next goal is to create an all-in-one quality assurance system for the beverage industry.


© 2022 Aiex.ai All Rights Reserved.