
Expansive data I/O tools

Extensive data management tools

Dataset analysis tools

Data generation tools to increase yields

Top of the line hardware available 24/7

AIEX Deep Learning platform provides you with all the tools necessary for a complete Deep Learning workflow, from data management tools to model training and, finally, deploying the trained models. You can easily transform your visual inspections using the trained models, saving time and money while increasing accuracy and speed.

High-end hardware for real-time 24/7 inferences

Transformation in the automotive industry

Discover how AI is helping shape the future

Cutting edge, 24/7 on premise inspections

See how AI helps us build safer workspaces

The applications of artificial intelligence (AI), specifically computer vision (CV), in auto manufacturing processes are constantly increasing. The largest car companies in Germany employ intelligent machines and robots on their production lines and also use AI to generate advertisements and sales concepts. Some car companies use computer-generated imagery (CGI) to let potential customers design their ideal car online and add their desired options to it in real time. This opportunity complicates the human-led quality assurance (QA) of the output renders, since visually inspecting large volumes of complex, mass-generated images is challenging. Automating this inspection with supervised machine learning (ML) is not straightforward either, because supervised ML depends on a massive, high-quality, labeled dataset.

Although access to public datasets has increased significantly in recent years, image annotation remains time-consuming and costly, as it often requires highly trained staff. To address these issues, several approaches have been proposed to reduce the labeling effort, including transfer learning, multi-task learning, few-shot learning, meta-learning, and active learning (AL).

The main idea of AL is to train a model on a much smaller training dataset that contains only the most informative samples, selected step by step during the learning process. First, a model is trained on a small, labeled dataset. Then, in each AL round, an acquisition function chooses a subset of samples from the unlabeled data pool, and an expert labels them. Finally, the newly labeled samples are added to the labeled data pool and the model is retrained; these rounds repeat until a specific termination criterion is met.
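The loop described above can be sketched in a few lines of Python. This is a minimal, self-contained illustration under stated assumptions, not the authors' implementation: the `oracle` callable stands in for the human expert, and the toy 1D threshold classifier (`train_threshold`) with its nearest-to-boundary acquisition function (`acquire_near_threshold`) are hypothetical stand-ins for the real image classifier and acquisition function. The termination criterion here is simply a fixed budget of rounds.

```python
import random
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical setup for this sketch: samples are integers, labels are 0/1.
Labeled = Dict[int, int]


def active_learning_loop(
    pool: Sequence[int],
    oracle: Callable[[int], int],                          # stands in for the human expert
    train: Callable[[Labeled], float],                     # returns a (toy) model
    acquire: Callable[[float, List[int], int], List[int]],  # acquisition function
    n_initial: int,
    batch_size: int,
    max_rounds: int,                                       # termination criterion: round budget
    seed: int = 0,
) -> Tuple[float, Labeled]:
    rng = random.Random(seed)
    unlabeled = list(pool)
    rng.shuffle(unlabeled)

    # Step 1: label a small random starting set and train on it.
    labeled: Labeled = {x: oracle(x) for x in unlabeled[:n_initial]}
    unlabeled = unlabeled[n_initial:]
    model = train(labeled)

    for _ in range(max_rounds):
        if not unlabeled:  # nothing left to query
            break
        # Step 2: the acquisition function picks the next batch.
        batch = acquire(model, unlabeled, batch_size)
        # Step 3: the expert labels the batch, which joins the labeled pool.
        for x in batch:
            labeled[x] = oracle(x)
        chosen = set(batch)
        unlabeled = [x for x in unlabeled if x not in chosen]
        # Step 4: retrain on the enlarged labeled pool.
        model = train(labeled)
    return model, labeled


# Toy stand-ins (assumptions): a 1D threshold classifier on the pool 0..99.
def train_threshold(labeled: Labeled) -> float:
    # Virtual anchors -1 and 100 bracket the pool when a class is still missing.
    hi_neg = max((x for x, y in labeled.items() if y == 0), default=-1)
    lo_pos = min((x for x, y in labeled.items() if y == 1), default=100)
    return (hi_neg + lo_pos) / 2


def acquire_near_threshold(threshold: float, unlabeled: List[int], k: int) -> List[int]:
    # Samples closest to the decision boundary are the most uncertain ones.
    return sorted(unlabeled, key=lambda x: abs(x - threshold))[:k]


model, labeled = active_learning_loop(
    range(100), oracle=lambda x: int(x >= 60),
    train=train_threshold, acquire=acquire_near_threshold,
    n_initial=10, batch_size=5, max_rounds=10,
)
print(model, len(labeled))  # 59.5 60
```

With only 60 of 100 samples labeled, the toy model recovers the exact boundary, because each round spends its labeling budget on the samples the current model is least sure about.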

Jakob Schöffer et al. from the Karlsruhe Institute of Technology in Germany presented an AL algorithm for automatic QA (ALQA) of CGI content production. The proposed QA system needs a considerably smaller labeled dataset to detect defects in virtual car renderings. Such systems can help auto manufacturers improve their customer service and increase profits.

The research method utilized by the authors consists of three major cycles of investigation: a relevance cycle to define the practical problem, a rigor cycle to outline the existing knowledge base, and one or two design cycles to represent and evaluate the research object. Each cycle is described below.

Auto manufacturers are increasingly using CGI of their products for advertising purposes. They create digital 3D models of their fleet, including the optional configurations available to customers on the aftermarket. This technology lets customers view their ideal vehicle configuration before deciding to purchase it. Nevertheless, creating and constantly updating defect-free 3D models of all vehicles and all their associated optional configurations is a challenging task. Typical defects in these 3D models include wiring errors in the individual model components or missing or black areas. To fix such issues, the defects must first be identified, which, according to the authors, is currently done through manual random checks.

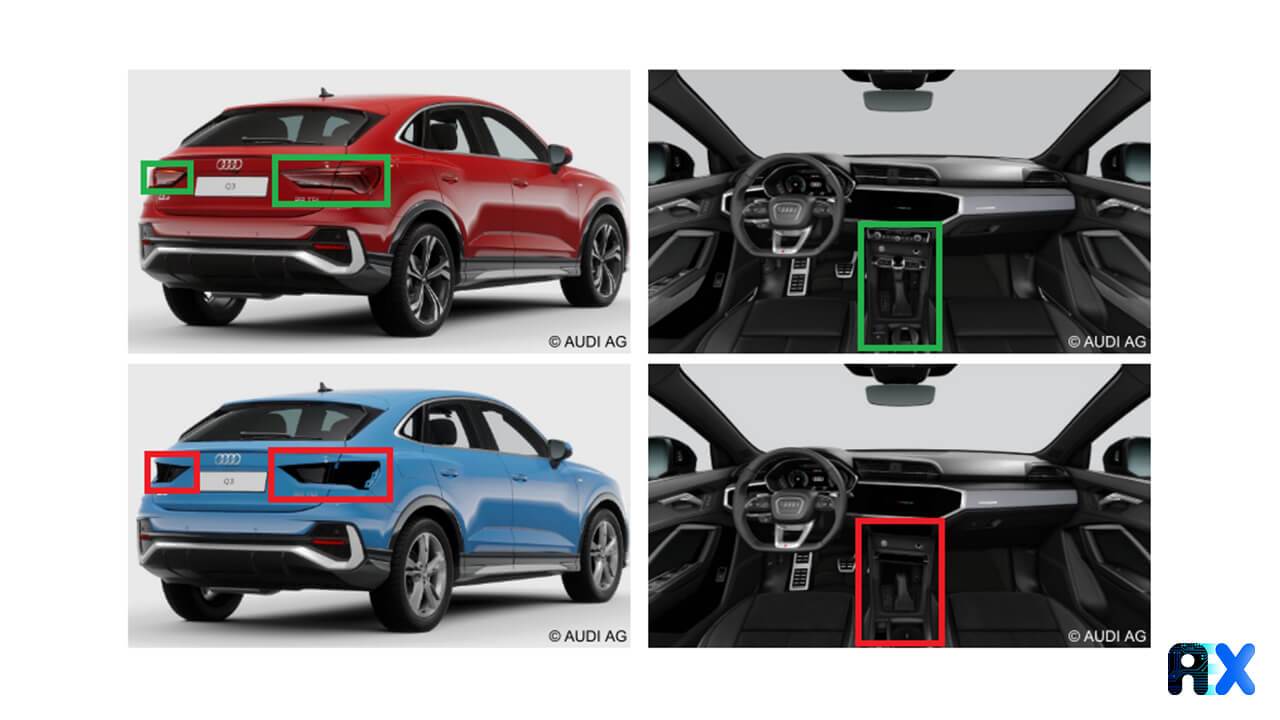

An example of such defects is depicted in Figure 1. In the exterior rear view (the left image), the taillights are missing (highlighted by a red box in the lower image). Additionally, in the interior front view (the right image), the upper part of the gear stick is not rendered (highlighted by a red box in the lower image).

First, the team designs an ALQA system that detects defects in car renderings, using AL as the kernel theory. Since the goal is to reduce the number of labeled images required for supervised ML, they select relevant theories from the knowledge base and incorporate them into design principles (DPs).

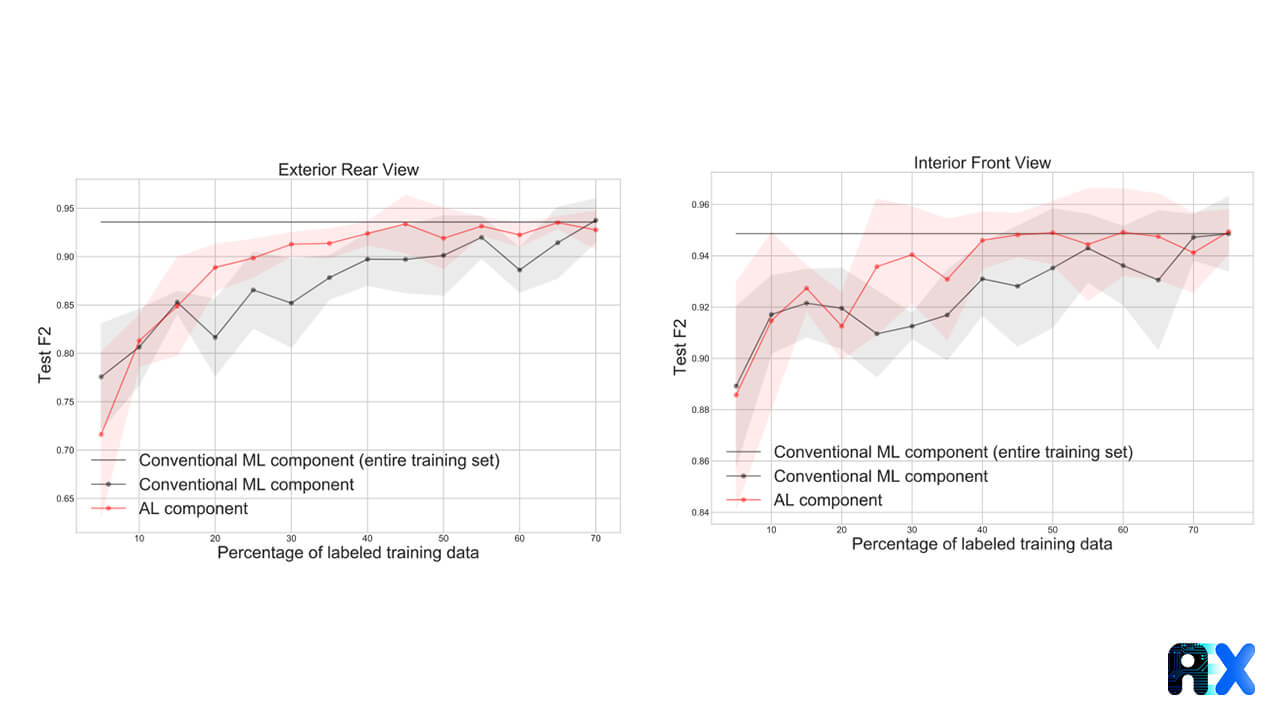

Using AL, they select the subset of the dataset that contributes most to the training process (DP1). This step substantially improves the model's learning speed (DP2). For model evaluation, the F2 metric (Equation (1)) is employed, as it combines recall and precision while weighting recall more heavily than precision (DP3). The human-in-the-loop concept incorporates human interaction into the decision processes performed by ML models; using it, QA managers get direct feedback in case of errors (DP4).
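Equation (1) is not reproduced in the text, so the sketch below uses the standard F-beta definition, F_beta = (1 + beta^2) * P * R / (beta^2 * P + R), where beta = 2 yields F2. Treating "defective render" as the positive class is our assumption. The two example calls show why F2 suits defect detection: a missed defect (false negative) lowers the score more than a false alarm (false positive) does.

```python
def f_beta(tp: int, fp: int, fn: int, beta: float = 2.0) -> float:
    """F-beta score from confusion-matrix counts; beta=2 gives the F2 score.

    With precision P = tp / (tp + fp) and recall R = tp / (tp + fn),
        F_beta = (1 + beta^2) * P * R / (beta^2 * P + R),
    which simplifies to (1 + beta^2) * tp / ((1 + beta^2) * tp + beta^2 * fn + fp).
    """
    b2 = beta * beta
    denom = (1 + b2) * tp + b2 * fn + fp
    # Convention (assumption): return 0.0 when there are no positives at all.
    return (1 + b2) * tp / denom if denom else 0.0


# Assumption: "defective render" is the positive class.
print(f_beta(tp=8, fp=8, fn=0))  # recall 1.0, precision 0.5 -> ≈ 0.833
print(f_beta(tp=8, fp=0, fn=8))  # recall 0.5, precision 1.0 -> ≈ 0.556
```

Working from raw counts avoids dividing by zero when a class has no predictions, which can happen early in AL when the labeled set is tiny.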

The authors review prior work in two areas: QA in CGI, and AL. Both are discussed in more detail below.

The authors report that research on defect detection in CGI is scarce. The most similar research concerns video game streaming; nonetheless, they state that no research had addressed QA of CGI in an industrial context before their work.

Generally speaking, AL algorithms fall into two main categories: generative and pool-based approaches. Generative algorithms use generative adversarial networks (GANs) to augment training datasets. Pool-based algorithms, on the other hand, employ different acquisition strategies to select the most informative subset of a pool of unlabeled data. The authors found the pool-based approach to be the most appropriate one in this case. They assume that the more uncertain a model is about a data point, the more informative that data point is.
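The post does not name the exact uncertainty measure behind the acquisition function. The sketch below shows three common pool-based choices (least confidence, margin, and entropy) applied to predicted class probabilities; the image names and probabilities are hypothetical, and any of these scores could plug into the selection step.

```python
import math
from typing import Callable, Dict, List, Sequence

Probs = Sequence[float]  # predicted class probabilities for one image


def least_confidence(p: Probs) -> float:
    return 1.0 - max(p)


def margin(p: Probs) -> float:
    first, second = sorted(p, reverse=True)[:2]
    return 1.0 - (first - second)  # small gap between top two classes -> high score


def entropy(p: Probs) -> float:
    return -sum(q * math.log(q) for q in p if q > 0)


def select_most_uncertain(
    pool: Dict[str, Probs], k: int, score: Callable[[Probs], float] = entropy
) -> List[str]:
    """Return the k pool items with the highest uncertainty score."""
    return sorted(pool, key=lambda name: score(pool[name]), reverse=True)[:k]


# Hypothetical predictions of a binary (defect / no defect) classifier.
pool = {"img_a": [0.98, 0.02], "img_b": [0.55, 0.45], "img_c": [0.70, 0.30]}
print(select_most_uncertain(pool, k=2))  # ['img_b', 'img_c']
```

For a binary classifier all three scores rank images identically; they start to differ only with three or more classes.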

Evaluating the AL component is the key focus of this study. Through this evaluation, the authors answer questions such as:

Is the ALQA performance comparable to conventional ML approaches while using fewer labeled images?

Is the classification performance of ALQA suitable for real-life applications?

Since the only source of error is the AL component, the human-in-the-loop component is not evaluated.

Schöffer et al. choose a 3D model of the Audi Q3 Sportback to evaluate their approach. However, ML techniques are still unable to use 3D models directly, so they use 2D renderings of the model for evaluation instead. Additionally, to reduce computational cost, they randomly select a subset of 4,000 images from the customers' order pool. They assign 2,000 images from each camera angle to the training dataset, 90% of the remaining images to the test dataset, and the rest to the validation dataset.
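The split rule above can be sketched as a small function. Because the post's figures can be read in more than one way, the usage below assumes 4,000 images for a single camera perspective, with 2,000 of them used for training; `split_dataset` and its parameters are illustrative names, not the authors' code.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(
    items: Sequence[T], n_train: int, test_fraction: float = 0.9, seed: int = 0
) -> Tuple[List[T], List[T], List[T]]:
    """Random split: n_train items for training, then test_fraction of the
    remainder for testing and the rest for validation."""
    if not 0 <= n_train <= len(items):
        raise ValueError("n_train must be between 0 and len(items)")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    train, rest = shuffled[:n_train], shuffled[n_train:]
    n_test = round(test_fraction * len(rest))
    return train, rest[:n_test], rest[n_test:]


# Assumed reading: 4,000 image IDs of one perspective, 2,000 for training.
train, test, val = split_dataset(range(4000), n_train=2000)
print(len(train), len(test), len(val))  # 2000 1800 200
```

Seeding a private `random.Random` instance keeps the split reproducible without touching the global random state.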

After a model is trained on a small, labeled dataset, the acquisition function chooses new samples in each AL round; these are labeled by an expert and added to the pool of labeled data. This procedure continues until a predefined termination criterion is met. For image classification, the authors used a convolutional neural network (CNN) based on a modified ResNet-18 architecture, together with the acquisition function.

The resolution of all images is fixed at 128×128 pixels. For each camera perspective, a small, labeled dataset of 100 randomly selected images is used during each AL round. The learning rate is set to 5×10⁻⁴. Afterward, the model is retrained from scratch. Each experiment is repeated five times, and the F2 score (Equation (1)) is reported together with its standard deviation.
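The setup described above can be collected into a configuration object plus a helper that repeats a run and reports the mean and standard deviation. `ALExperimentConfig`, `repeat_experiment`, and the `run_once` callable (which would train the model with a given seed and return its test F2 score) are hypothetical names. The post does not say whether the population or sample standard deviation is reported, so this sketch assumes the sample version (`statistics.stdev`).

```python
import statistics
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class ALExperimentConfig:
    image_size: Tuple[int, int] = (128, 128)  # all renders resized to 128x128 px
    labeled_images_per_round: int = 100       # randomly selected, per camera perspective
    learning_rate: float = 5e-4
    retrain_from_scratch: bool = True         # model is re-initialized after each round
    n_repeats: int = 5


def repeat_experiment(
    run_once: Callable[[int], float], cfg: ALExperimentConfig
) -> Tuple[float, float]:
    """Run the experiment cfg.n_repeats times (one seed each) and return
    the mean and sample standard deviation of the resulting F2 scores."""
    scores = [run_once(seed) for seed in range(cfg.n_repeats)]
    return statistics.mean(scores), statistics.stdev(scores)
```

Passing the seed into `run_once` makes each of the five repetitions reproducible while still varying the random initial labeled set and weight initialization between them.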

Figure 2 displays the results for the two camera perspectives as a function of the percentage of the training dataset that is labeled. The black horizontal lines represent the performance of the conventional ML component. F2 scores of 93.58% and 94.86% are achieved for the exterior rear view and the interior front view, respectively, while reducing the labeling overhead by 35% on average. For both camera perspectives, the AL component reaches the upper bound set by the conventional ML component in terms of F2 score while using significantly smaller training datasets.
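The post does not explain how the 35% figure is derived. One plausible way to read such a number off a learning curve is to find the smallest labeled fraction at which the AL curve first reaches the conventional-ML upper bound, optionally within a tolerance. The function below is a hypothetical sketch of that reading, not the authors' procedure; averaging its result over the camera perspectives would give one overall figure.

```python
from typing import Optional, Sequence, Tuple


def labeling_savings(
    curve: Sequence[Tuple[float, float]],
    upper_bound: float,
    tolerance: float = 0.0,
) -> Optional[float]:
    """curve holds (fraction_of_training_set_labeled, F2) points.

    Returns 1 - (smallest fraction whose F2 reaches upper_bound - tolerance),
    or None if the curve never reaches it.
    """
    for fraction, f2 in sorted(curve):  # scan from the smallest labeled fraction
        if f2 >= upper_bound - tolerance:
            return 1.0 - fraction
    return None
```

A small `tolerance` avoids penalizing a curve that plateaus a hair below the baseline because of run-to-run noise.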

This approach can be extended to the entire vehicle fleet and to features extracted from 3D models. However, the scope of this paper is limited to one vehicle model, namely the Audi Q3 Sportback, and the system is intended as a supporting tool for quality assurance. The authors do not claim to automate the entire quality assurance process, as the model does not support dynamic car model functionalities, e.g., opening the doors and trunk.

© 2022 Aiex.ai All Rights Reserved.