The production of photogravure cylinders is often hindered by defects such as holes in the printing cylinder. Detecting these defects with a rapid, accurate, and automated method can effectively decrease QC costs. Villalba-Diez et al. from the Heilbronn University of Applied Sciences devised a deep neural network (DNN) soft sensor, in collaboration with other institutes from Germany and Spain, for an automatic QC system in printing processes. The authors’ goals for this research were to predict the errors of a gravure cylinder, facilitate human operation, and autonomously evaluate product quality without human involvement.

Cyber-physical infrastructures emerge from the combination of the Industrial Internet of Things (IIoT) and data science. Developed countries such as the United States, China, Germany, and Japan have launched various AI programs to advance manufacturing processes. Toyota’s implementation of intelligent autonomation, or JIDOKA, which builds on IIoT, is a good example. Such machines can recognize their own condition using intelligent sensors and can identify the specific needs of customers; as a result, they can react flexibly and appropriately. Integrating this technology into production processes would raise the level of automation, QC accuracy, and customization, and would enhance value stream performance.

Optical quality control (OQC) has long been applied to manufacturing processes in order to meet customers’ demands. However, the human-centered approach to OQC is limited by ergonomics, fatigue, and high labor costs, and cannot satisfy the necessary requirements. Automatic, AI-enabled detection, segmentation, and classification of visual defects therefore appear to be the ultimate industrial solution. The conditions under which images are acquired, such as lighting and the size, shape, and orientation of products, nevertheless affect defect detection by computer vision (CV). As a result, CV models often fail in the real world, particularly on samples with rough textures or on complex and noisy sensor data. This creates a demand for more robust, sophisticated, and reliable models for identifying defects under real-world conditions.

Laser engraving of rotogravure cylinders is a state-of-the-art technology that is still susceptible to defects, including dents, scratches, inclusions, spray, curves, offset, smearing, excessive, pale, or missing printing, and color errors. Because many different errors and noise levels are present in industrial settings, training a CV-based model to detect defects automatically and precisely is extremely challenging. In practice, only about 30% of the possible errors can be detected by an intelligent machine, which increases both the cost of OQC and the lead time of the overall process.

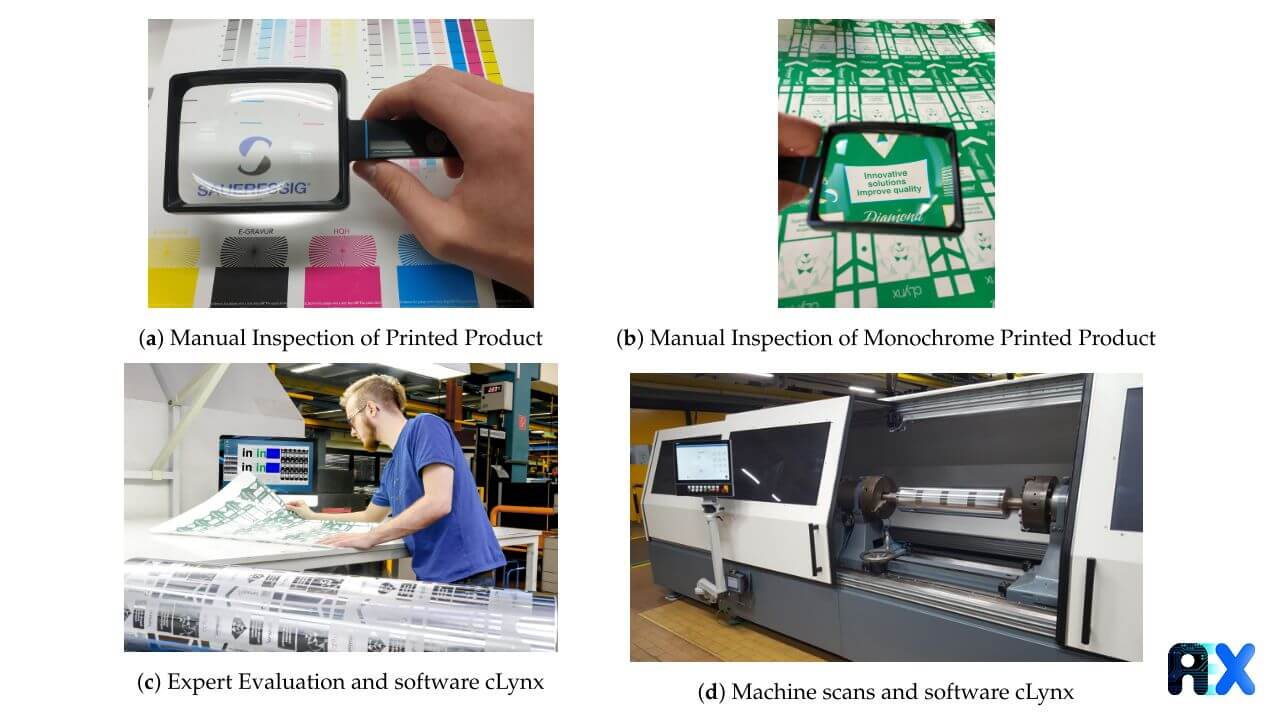

The following stages show the evolution of OQC techniques, which finally led to the implementation of automatic deep learning-based OQC:

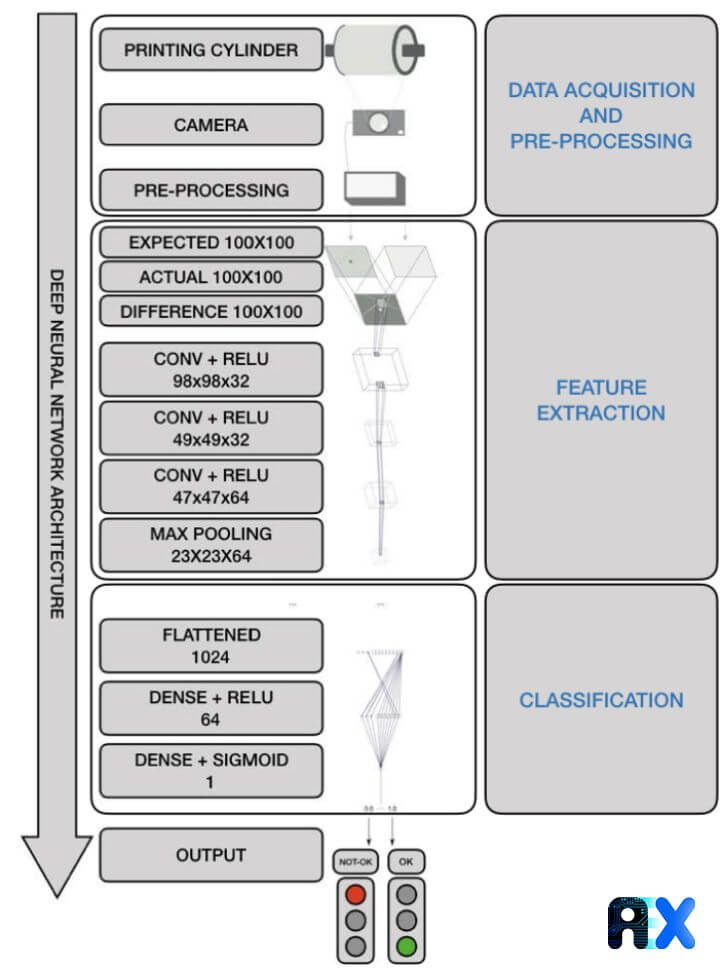

After intensive experiments with various architectures, such as DNNs, restricted Boltzmann machines, and deep belief networks, the authors selected a fully automated DNN architecture with a specific configuration of filter sizes, abstraction layers, and so on. Immediately after scanning the cylinder, one can decide whether errors need to be corrected or not. Figure 2 depicts a schematic of the overall OQC process using industrial CV in the Industry 4.0 printing sector.

To train the CV model, the authors used a computer equipped with an Intel(R) Xeon(R) Gold 6154 3.00 GHz CPU, an NVIDIA Quadro P4000 graphics processing unit (GPU), and 96 GB of random-access memory (RAM), running a 64-bit copy of Red Hat Linux 16.04. Keras, a high-level interface for TensorFlow (Version 1.8), was used in Python (Version 2.7) to train and test the deep learning model. The TF.Learn module, a high-level Python module for distributed machine learning inside TensorFlow, was employed to create, configure, train, and evaluate the DNN. Additional packages were OpenCV for CV algorithms and image processing, NumPy for scientific computing and array calculation, and Matplotlib for displaying plots.

Image pre-processing consists of three steps:

(1) Determining an image size for DNN input and the convolutional window size used by the DNN,

(2) Brightness adjustment through histogram stretching,

(3) Automatic selection and labeling of images.

The image aspect ratio and the number of pixels must be determined first. The authors cite a mean aspect ratio of about 1.5 with a median of 1.0, and set the input image size to 100 × 100 pixels.

Since many parameters affect the acquisition of the cylinder surface images, a similar brightness for the cylinder surface and engraved parts should be provided. For example, the brightness adjustment of an image is set so that 0.5% of all pixels will have a value of 0 or 255.
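The brightness adjustment described above can be sketched with NumPy as a percentile-based histogram stretch. This is only an illustration, not the authors' code: the function name `stretch_histogram` is hypothetical, and the sketch assumes 8-bit grayscale images and that the 0.5% of saturated pixels is split evenly between the dark and bright ends.

```python
import numpy as np

def stretch_histogram(image, saturated_fraction=0.005):
    """Linearly stretch an 8-bit grayscale image.

    Assumption: `saturated_fraction` (0.5% by default) is shared equally
    between pixels clipped to 0 and pixels clipped to 255.
    """
    img = np.asarray(image, dtype=np.float64)
    half_percent = 100.0 * saturated_fraction / 2.0
    # Intensities below `lo` will map to 0, above `hi` to 255.
    lo, hi = np.percentile(img, [half_percent, 100.0 - half_percent])
    if hi <= lo:
        # Flat image: nothing to stretch, return an unchanged copy.
        return np.asarray(image, dtype=np.uint8).copy()
    stretched = (img - lo) / (hi - lo) * 255.0
    return np.clip(np.rint(stretched), 0, 255).astype(np.uint8)
```

Applied to every cylinder scan before cropping, a stretch of this kind gives the surface and the engraved parts a comparable brightness range regardless of the acquisition conditions.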

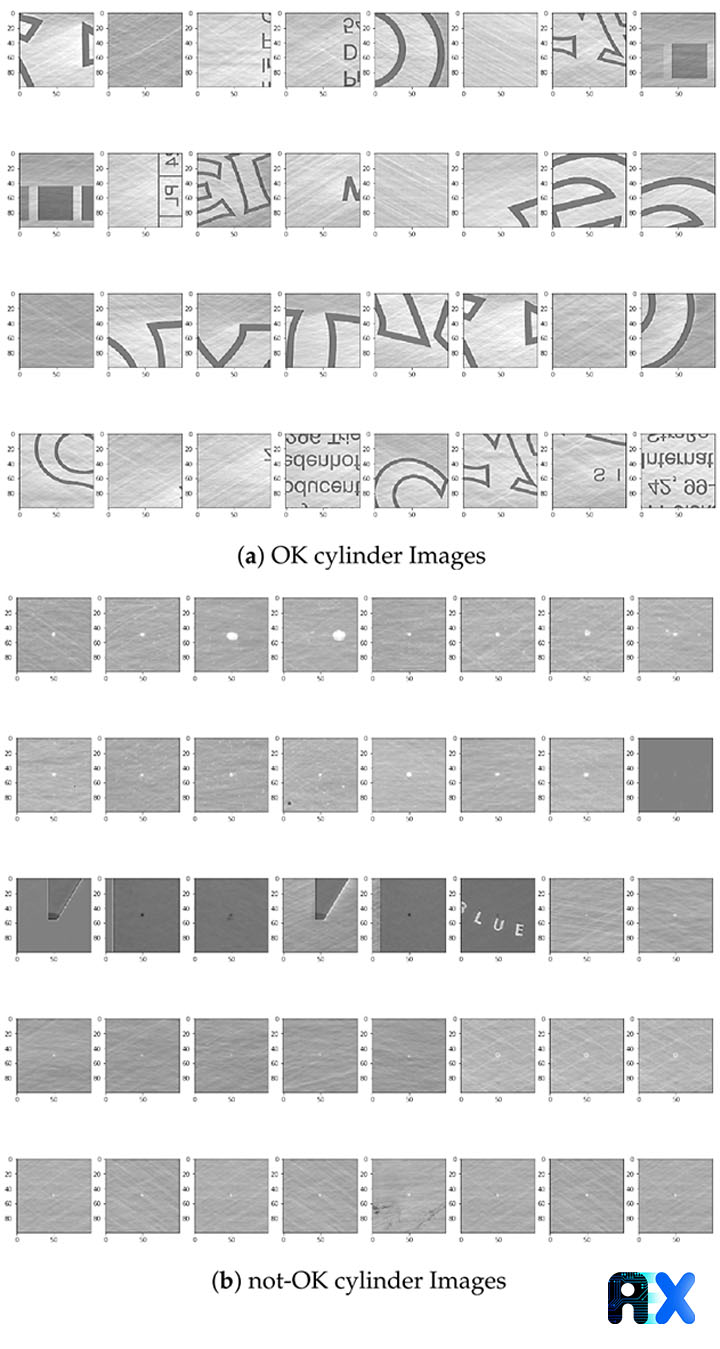

The images are then cut from the original file and categorized into two subgroups, OK-cylinder (Figure 3a) and not-OK-cylinder (Figure 3b). The errors may be very different in nature, which indicates that solving the challenges in training and testing the DNN model will be difficult. More details about how the authors addressed the issue can be found in the reference paper.

Classification and performance evaluation are carried out with simple algorithms. For example, the network uses three convolutional layers as a compromise between the number of parameters and the number of available images. Because soft sensors run in real time, applying reinforcement learning would run up against the real-time constraint.

A deep stack of alternating convolutional and sub-sampling max-pooling layers is used for feature extraction. The convolution operations, followed by activation functions, extract features from the input. A rectified linear unit (ReLU) is an activation function that zeroes out negative values and helps mitigate the vanishing gradient problem when training a DNN. Max pooling extracts windows from the input feature maps and outputs the maximum value of each channel within each window.
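The two operations named above, ReLU and max pooling, are simple enough to write out directly. The following NumPy sketch is illustrative only; the function names and the 2 × 2 window are assumptions, since the article does not state the pooling size the authors used.

```python
import numpy as np

def relu(x):
    """Rectified linear unit: negative values become zero."""
    return np.maximum(x, 0.0)

def max_pool_2x2(feature_maps):
    """2x2 max pooling with stride 2 on an array of shape (H, W, C).

    Odd trailing rows or columns are dropped (an assumption of this sketch).
    Each channel is pooled independently.
    """
    h, w, c = feature_maps.shape
    h2, w2 = h // 2, w // 2
    trimmed = feature_maps[: h2 * 2, : w2 * 2, :]
    # Group pixels into non-overlapping 2x2 windows, then take the max.
    windows = trimmed.reshape(h2, 2, w2, 2, c)
    return windows.max(axis=(1, 3))
```

For a 100 × 100 input, each such pooling step halves the spatial resolution, so the feature maps shrink while the strongest responses are kept.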

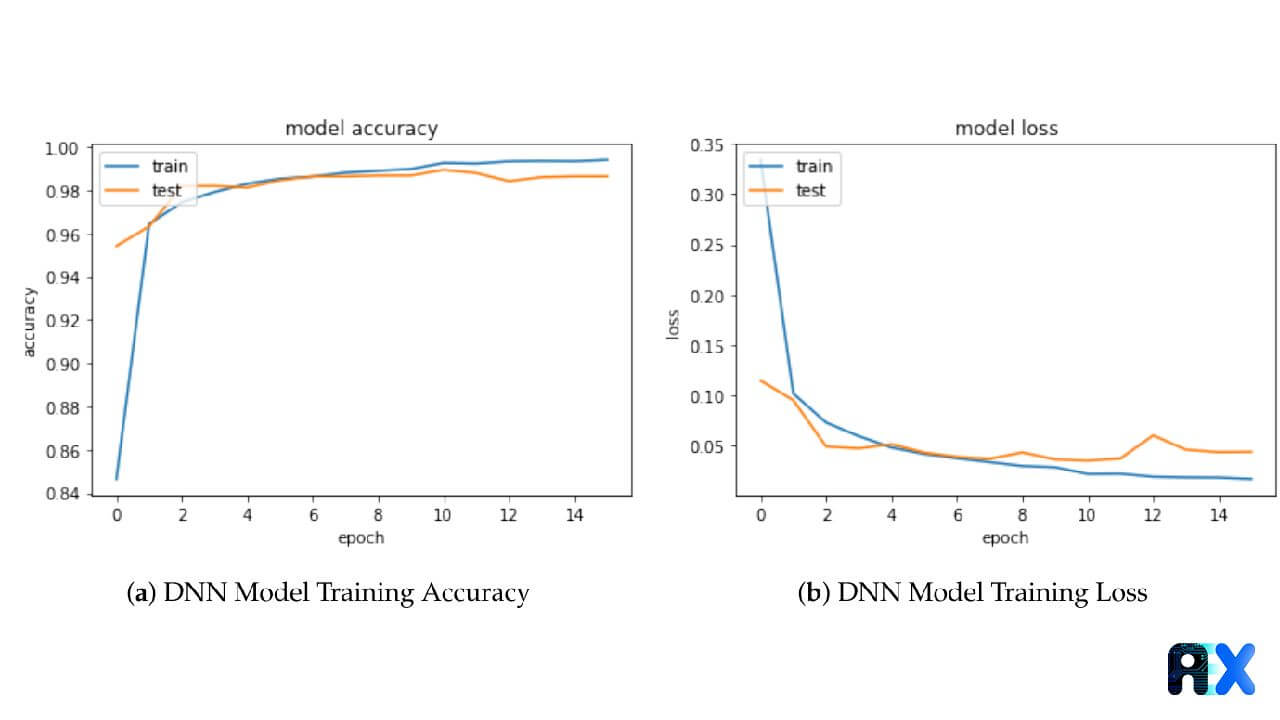

Some examples of classification models are LeNet, AlexNet, Network in Network, GoogLeNet, and DenseNet. Because the model outputs a probability distribution over the output classes, a binary cross-entropy loss function is used. The cross-entropy measures the distance between the ground-truth distribution and the predictions. A balanced dataset of 13,335 not-OK and 13,335 OK-cylinder images (26,670 images in total) is split into training (80%), validation (10%), and test (10%) sets, with Keras on a TensorFlow backend for the DNN and OpenCV/NumPy for image manipulation. The dataset was collected over 14 months from almost 4,000 cylinder scans. Data augmentation is performed by vertically and horizontally mirroring the training set, resulting in 85,344 training samples in total. Figures 4a and 4b display model accuracy and loss versus the number of epochs, respectively. Neither accuracy nor loss changed considerably after epoch 10.
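The numbers in this paragraph can be checked with a few lines of NumPy. The sketch below is not the authors' code: the function names are hypothetical, and it assumes that the four variants per training image are the original, its horizontal mirror, its vertical mirror, and the image mirrored both ways, which is one reading consistent with the reported 85,344 samples. It also includes a plain binary cross-entropy for reference.

```python
import numpy as np

def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy between labels (0/1) and predicted probabilities."""
    y_true = np.asarray(y_true, dtype=np.float64)
    # Clip to avoid log(0) for fully confident predictions.
    y_prob = np.clip(np.asarray(y_prob, dtype=np.float64), eps, 1.0 - eps)
    losses = -(y_true * np.log(y_prob) + (1.0 - y_true) * np.log(1.0 - y_prob))
    return float(np.mean(losses))

def augment_by_mirroring(images):
    """Return originals plus three mirrored copies for a batch of shape (N, H, W).

    Assumption: horizontal, vertical, and combined mirroring are all used.
    """
    return np.concatenate(
        [
            images,                 # original
            images[:, :, ::-1],     # mirrored left-right
            images[:, ::-1, :],     # mirrored top-bottom
            images[:, ::-1, ::-1],  # mirrored both ways
        ],
        axis=0,
    )

# Split sizes for the balanced dataset described in the text.
total_images = 2 * 13335                # 26,670 images
n_train = total_images * 80 // 100      # 80% for training
n_val = total_images * 10 // 100        # 10% for validation
n_test = total_images - n_train - n_val
n_train_augmented = 4 * n_train         # four variants per training image
```

Under these assumptions, `n_train` is 21,336 and `n_train_augmented` is 85,344, matching the figure quoted above.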

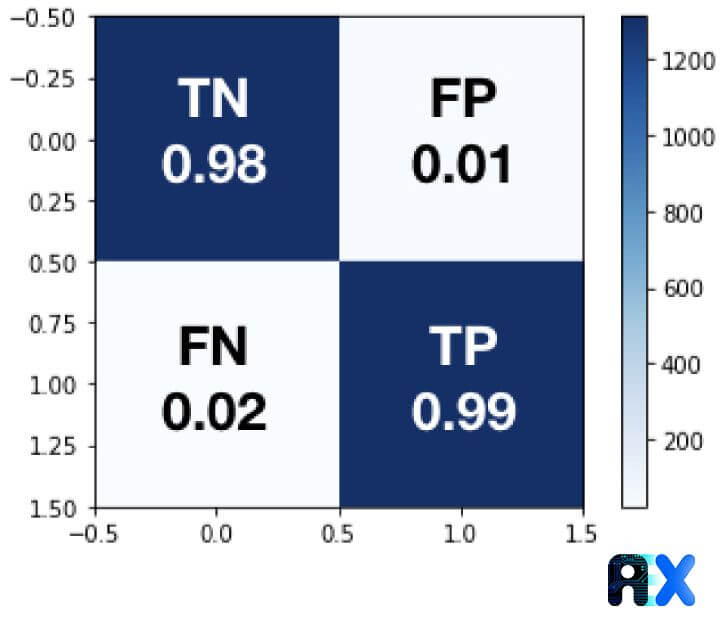

The confusion matrix combines contingency classes (TRUE, FALSE) to summarize the training results. This matrix consists of four categories:

(1) True Negative (TN)

(2) False Positive (FP)

(3) False Negative (FN)

(4) True Positive (TP)

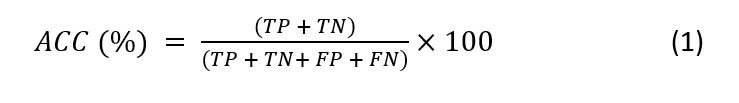

The accuracy (ACC) can be defined by the following expression:

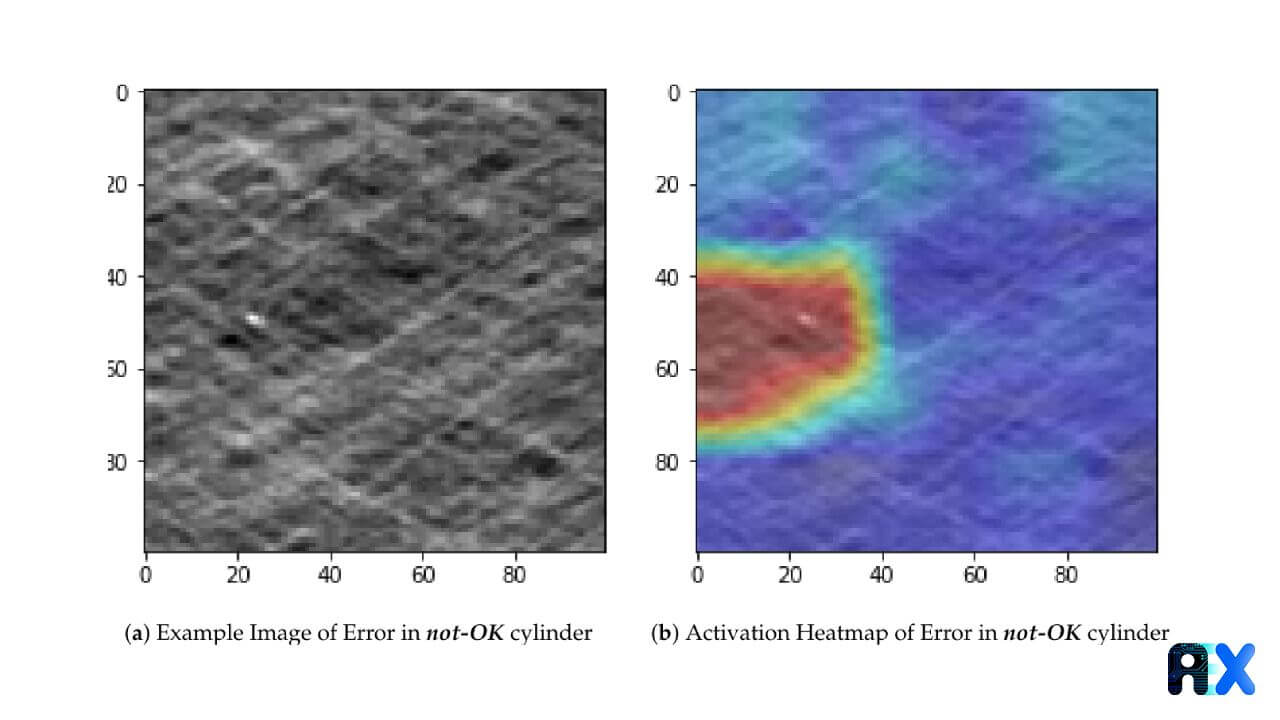
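As a small illustration, the four confusion-matrix categories and the accuracy can be computed as follows. The sketch uses the standard definition ACC = (TP + TN) / (TP + TN + FP + FN), which the expression above presumably shows; the function names are hypothetical, and treating not-OK cylinders as the positive class (label 1) is an assumption.

```python
import numpy as np

def confusion_counts(y_true, y_pred):
    """Return (TN, FP, FN, TP) for binary labels.

    Assumption: label 1 means not-OK (positive), label 0 means OK (negative).
    """
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tn = int(np.sum(~y_true & ~y_pred))  # OK cylinder, predicted OK
    fp = int(np.sum(~y_true & y_pred))   # OK cylinder, predicted not-OK
    fn = int(np.sum(y_true & ~y_pred))   # not-OK cylinder, predicted OK
    tp = int(np.sum(y_true & y_pred))    # not-OK cylinder, predicted not-OK
    return tn, fp, fn, tp

def accuracy(tn, fp, fn, tp):
    """Fraction of all samples that were classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)
```

In a QC setting the false negatives are usually the most costly entry, because they correspond to defective cylinders that pass inspection.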

The visualization of what the DNN captures can be implemented by class activation heatmaps. Figure 5a shows an image of a not-OK cylinder. A class activation heatmap is a 2D grid of scores associated with a specific output class and is computed for every location in any input image, indicating how important each location is with respect to the class under consideration (Figure 5b).

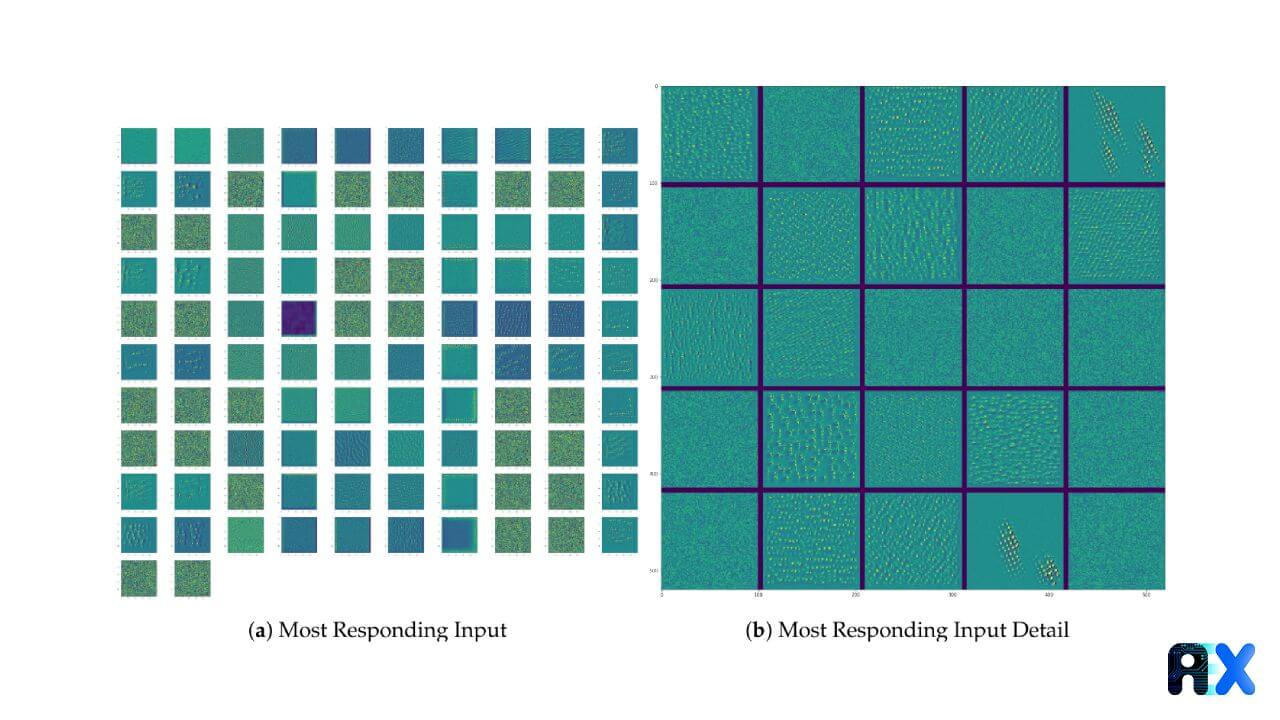

The input pattern that produces the highest response from a given filter can also be computed. This is done by applying gradient ascent to the pixel values of the input image of a convolutional network so as to maximize the response of a specific filter, as shown in Figure 6.
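The idea can be illustrated on a toy case: a single convolution filter followed by a ReLU, with the gradient written out by hand in NumPy. This is a deliberately simplified sketch under stated assumptions, not the authors' procedure; in a real network the gradient would come from the framework's automatic differentiation, and the function names, step size, and gradient normalization here are all hypothetical choices.

```python
import numpy as np

def response_and_gradient(image, kernel):
    """Mean ReLU response of one filter and its gradient w.r.t. the image."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    response = 0.0
    grad = np.zeros_like(image, dtype=np.float64)
    for i in range(out_h):
        for j in range(out_w):
            activation = np.sum(image[i:i + kh, j:j + kw] * kernel)
            if activation > 0:  # ReLU passes only positive activations
                response += activation
                grad[i:i + kh, j:j + kw] += kernel
    n = out_h * out_w
    return response / n, grad / n

def maximize_filter_response(image, kernel, steps=20, step_size=1.0):
    """Gradient ascent on the input image to increase the filter response."""
    image = np.array(image, dtype=np.float64)
    for _ in range(steps):
        _, grad = response_and_gradient(image, kernel)
        # Normalize the gradient so the step size is comparable across steps.
        grad = grad / (np.sqrt(np.mean(grad ** 2)) + 1e-8)
        image = image + step_size * grad  # ascent: move along the gradient
    return image
```

Starting from a low-contrast noise image and a vertical-edge kernel, the resulting image develops the pattern that this filter responds to most strongly, which is the same principle used to produce filter visualizations for a trained network.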

An ACC of 98.4% was achieved in the fully automatic recognition of production errors. The ACC levels can be observed in Figure 7 and are acceptable for such a complicated industrial classification problem.

Because the automation relies on DNN soft sensors, OQC costs can be reduced considerably while the accuracy of error detection increases significantly. The authors state that their approach is highly promising and paves the way for further industrial implementation.

If you need more information about how AIEX experts can help you and your business, please feel free to contact us.


© 2022 Aiex.ai All Rights Reserved.