The AIEX Deep Learning platform provides all the tools needed for a complete deep learning workflow, from data management to model training and, finally, deployment of the trained models. With the trained models you can easily transform your visual inspections, saving time and money while increasing accuracy and speed.

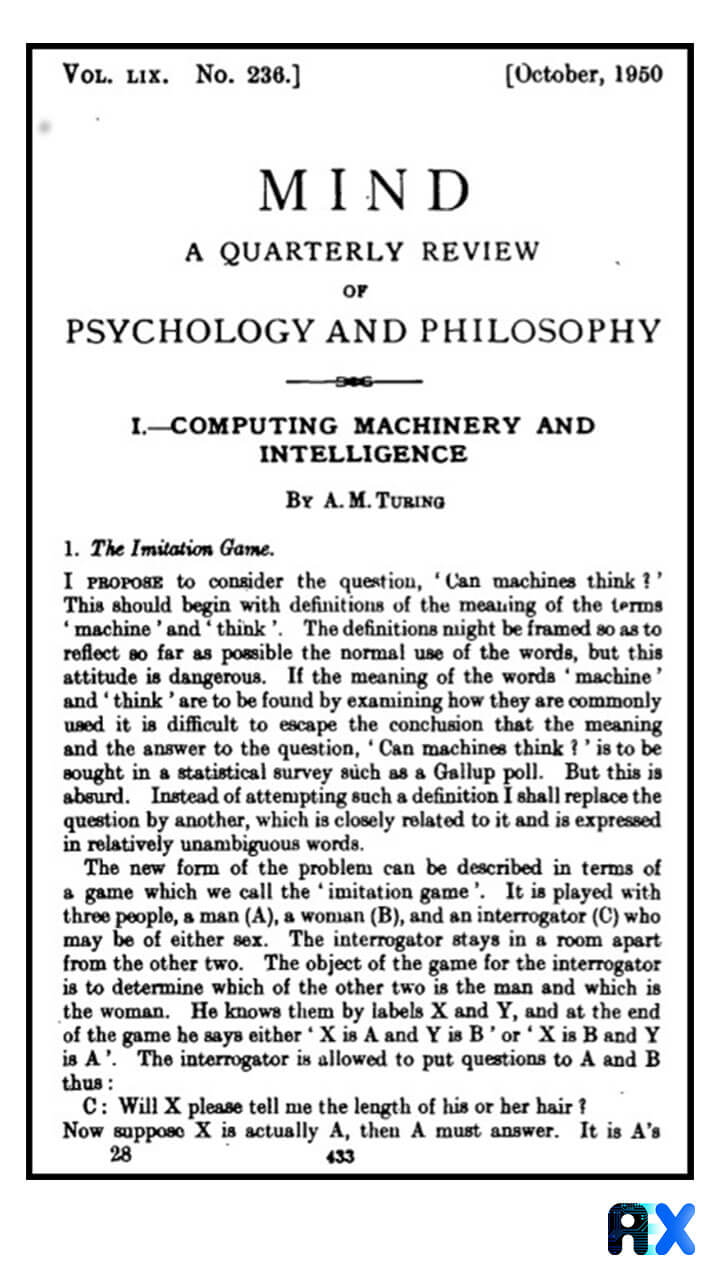

Machine learning and artificial intelligence are familiar terms in technology, and AI has even been called the fourth industrial revolution. Contrary to a common misconception, artificial intelligence is not a new technology. The concept is usually traced back to 1950, when Alan Turing published his paper “Computing Machinery and Intelligence”, which introduced what is now famous as the “Turing Test”.

Turing opened the paper with a deceptively simple question: “Can machines think?”. The foundation of today’s artificial intelligence, and of whatever it becomes in the future, stems from this question.

Perhaps Turing did not realize how profoundly this simple question would change the world. Put simply, artificial intelligence is the intelligence displayed by machines, as measured against human or natural intelligence.

What matters about machines becoming intelligent is that they can perform the cognitive functions of the human brain; in other words, they can learn from experience and solve new problems. By this definition, not every computer program or machine that executes predetermined commands is intelligent: a machine or program uses artificial intelligence only if it can undertake tasks in a manner similar to humans. But how can we tell whether a machine has reached a human level of intelligence? To answer this, we return to Turing’s question, “Can machines think?”, and the test he proposed in response, now known as the Turing Test.

Turing framed the test as a game he called the imitation game. Rather than measuring intelligence directly, it checks whether a judge can make a correct identification. The game needs three participants: a judge, a male volunteer, and a female volunteer. The judge communicates with each volunteer separately, in writing, without seeing or hearing them, and must decide which one is the man and which is the woman. The man tries to make the judge identify him wrongly, while the woman tries to help the judge. But what does this have to do with artificial intelligence? Turing proposed replacing the male volunteer with a machine. If the judge is fooled as often when playing against the machine as when playing against the man, the machine cannot reliably be told apart from a human, and by Turing’s criterion it can be said to think; in other words, it exhibits artificial intelligence. Computers of Turing’s era had very little memory and processing power, and the earliest ones could not even store programs, so running such a test was not practical at the time.

Five years later, Herbert Simon and Allen Newell developed the Logic Theorist, a program designed to mimic human problem-solving skills. Between 1957 and 1974, as computers became faster, could store more information, and grew significantly cheaper to use, AI entered a new era. Advances in algorithm design and the optimism of leading researchers led organizations and governments to increase their investment in AI. By the mid-1970s, however, progress had stalled, and the technology was still far from anything resembling human intelligence. One reason for this stagnation was the limited processing power of computers. Governments and organizations gradually cut funding for related projects, and research remained largely dormant, a period that became known as the AI winter.

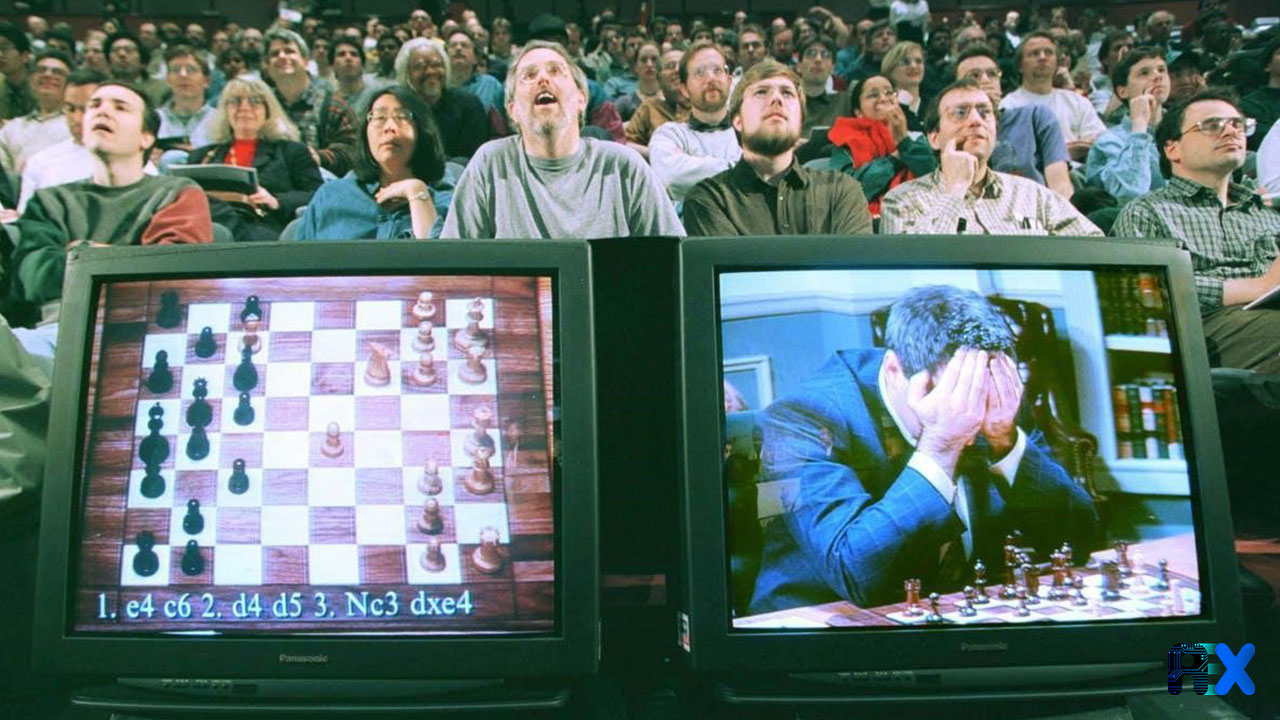

The winter lasted until the mid-1980s, when better algorithms and computing tools, along with larger research budgets, renewed interest in AI. Electronics technologies such as MOS (metal-oxide-semiconductor) and VLSI (very-large-scale integration) paved the way for the development of artificial neural networks. Although many projects failed during this time, AI was a hot topic again, and using machines for intelligent tasks quickly became a trend. In the 1990s and at the dawn of the 21st century, AI applications in medical diagnostics were developed, and their capabilities gradually became evident. Chess grandmaster Garry Kasparov’s 1997 defeat by IBM’s Deep Blue was a turning point for AI: it showed that a machine could outperform the best human player at a task long regarded as a benchmark of human intellect. In the early 21st century, artificial intelligence spread to more and more fields, such as statistics, economics, and mathematics, marking the beginning of a new era of computer automation.

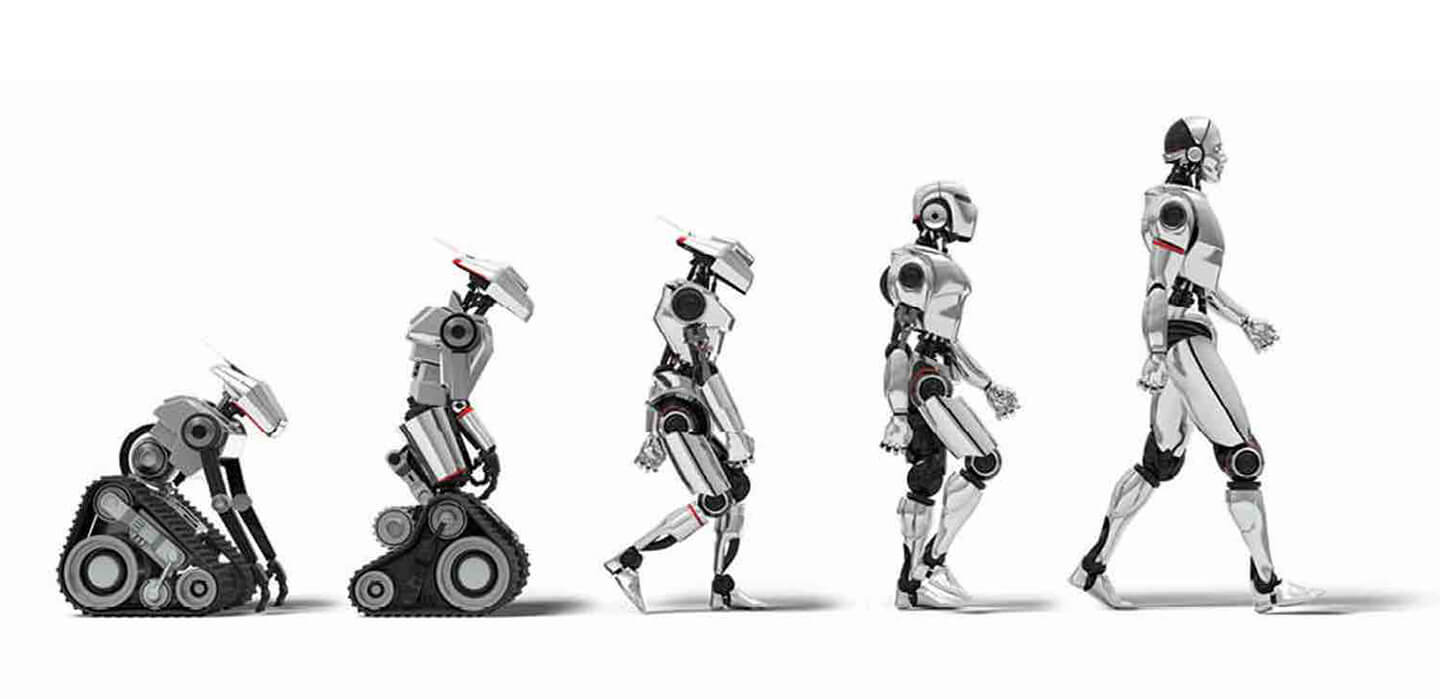

There is little doubt that the 2010s were the golden years of artificial intelligence; the effects of rapid advances gradually appeared in various tools and equipment and, ultimately, in our everyday lives. With more powerful processors and the large quantities of data generated on the Internet, progress in the field accelerated sharply from around 2013. New kinds of AI systems have since been developed that can analyze and solve more complex problems and even help create other AI systems. Artificial intelligence and machine learning are now so intertwined with our lives that few people have never heard of them. Today, artificial intelligence has found its place in many fields of science and industry and reaches into every corner of our lives. By solving new problems, it has brought significant progress to human societies, to the point that it is often called the fourth industrial revolution.

© 2022 Aiex.ai All Rights Reserved.